AUTOMATION HISTORY

Total Articles Scraped: 857

Total Images Extracted: 1480

Scraped Articles

| Title | URL | Images | Scraped At | Status | Action |
|---|---|---|---|---|---|

| Computer Vision Startup Tractable Hits $1 Billion Valuation - Business … | https://www.businessinsider.com/compute… | 1 | Dec 26, 2025 00:03 | active | |

Computer Vision Startup Tractable Hits $1 Billion Valuation - Business Insider
URL: https://www.businessinsider.com/computer-vision-startup-tractable-1-billion-valuation-2021-6
Description: Tractable uses artificial intelligence to help insurers assess car damage and estimate repair costs.
Content:

Tractable says it is the UK's first computer vision unicorn, after raising $60 million in fresh funding at a valuation above $1 billion. The round was led by existing backers Insight Partners and Georgian. Company president Adrien Cohen said the firm had grown revenue to eight figures, thanks to the startup landing major insurers as clients in the last year.

The London startup, founded in 2014, has primarily applied computer vision to assess car damage after an accident. It partners with insurers to make an initial assessment and estimate repair costs, which can reduce the time a car spends in the body shop. The firm has trained its algorithms on scores of photos of damaged cars, and claims the system is as accurate as a human.

Tractable was cofounded by computer scientist and former hedge fund quant Alex Dalyac, machine learning specialist Razvan Ranca, and ex-Lazada exec Adrien Cohen. "Reaching this milestone is not important, per se, but it's what it says about the impact and scale of our technology, the validation of reaching this scale," Cohen said of the firm's unicorn status.

The startup counts around 20 insurance clients across the US, Europe, and Asia, including Berkshire Hathaway affiliate Geico. Though it initially specialized in auto repair assessments and estimates, the firm is now expanding into analyzing property damage and even car purchasing. "We're going to go deeper, we think our AI can deal with cases where you want to inspect a vehicle's condition, not just in an accident, so when you purchase, sell, or lease," said Cohen. "All these events, where you can accelerate the process by understanding the vehicle condition from photos." In theory, the platform could partner with a used-car platform like Cazoo to assess the condition of a car placed for sale. Cohen said auto rental firms and auto manufacturers are also potential clients.
Asked about revenue growth, Cohen said the firm was privately held and would not reveal specific numbers. "It's an 8-figure revenue [number], with 600% growth in the past 24 months," he said, adding that the firm had raised only $55 million in outside capital prior to the new round.

Tractable is one of a wave of startups benefiting from the maturation of computer vision. According to this year's edition of the annual AI Index, collated with Stanford University, computer vision is becoming increasingly "industrialized."

Alessio Petrozziello, machine learning research engineer at London data extraction startup Evolution AI, says that, more broadly, computer vision has some hurdles to clear before it goes fully mainstream. "There's certainly a push to commercialize these models, but it's been clear they are not at the level where you can [fully] rely on them," he said. "For example, for a self-driving car, it can't make any mistakes, certainly no more than a human." Apart from accuracy, he added, there's the issue of responsibility. "You use a model, and the model makes a mistake, who's responsible? There isn't a clear-cut answer."

Eleonora Ferrero, director of operations at Evolution AI, added that success for startups like Tractable was as much about execution as about the fundamental computer vision tech. "Their go-to-market was partnerships with key insurance companies that provided data, it's an advantage," she said, adding that Tractable had been smart to identify something insurers sought: increased operational efficiency.

Karen Burns, founder of computer vision platform Fyma, said adoption depended on clients being ready for the tech. Fyma's platform, trained on anonymized data, analyzes what's going on in a physical space, whether that's a firm tracking the movements of its autonomous robots for safety or a retailer measuring footfall. "Before you can adopt AI, you have to go through a big transformation," she said.
Tractable's Cohen agreed, saying that the firm had relied not only on the quality of its AI development but also on selling the usefulness of AI to enterprise clients. "A big playbook we've cracked is how to deploy and capture the value of artificial intelligence in an enterprise context," he said. "This is very challenging, and something we've had to figure out."


| AI In Computer Vision Market worth $45.7 billion by 2028 … | https://www.prnewswire.com/news-release… | 1 | Dec 26, 2025 00:03 | active | |

AI In Computer Vision Market worth $45.7 billion by 2028 - Exclusive Report by MarketsandMarkets™
Description: /PRNewswire/ -- The global AI in computer vision market is expected to be valued at USD 17.2 billion in 2023 and is projected to reach USD 45.7 billion by...
Content:

Feb 27, 2023, 11:30 ET

CHICAGO, Feb. 27, 2023 /PRNewswire/ -- The global AI in computer vision market is expected to be valued at USD 17.2 billion in 2023 and is projected to reach USD 45.7 billion by 2028, growing at a CAGR of 21.5% from 2023 to 2028, according to a new report by MarketsandMarkets™. Advances in deep learning algorithms, increased data availability, faster and cheaper computing power, and progress in hardware such as GPUs and TPUs are the driving factors of the market.

Download PDF Brochure: https://www.marketsandmarkets.com/pdfdownloadNew.asp?id=141658064

Browse in-depth TOC on "AI In Computer Vision Market": 142 Tables, 60 Figures, 255 Pages

Several trends and disruptions are affecting customer businesses in AI computer vision:

Advancements in deep learning: deep learning, a subfield of machine learning, has revolutionized AI computer vision. With the development of deep learning algorithms and the availability of large datasets, AI computer vision is becoming increasingly accurate and effective.

Increased use of edge computing: edge computing is a distributed computing paradigm that processes data close to its source rather than sending it to a central location. It is becoming increasingly popular in AI computer vision because it enables real-time processing and reduces latency.

Rising competition: with the growing popularity of AI computer vision, competition in the market is increasing, and companies seek to differentiate their offerings with innovative and unique solutions.

Increasing use of AI computer vision in autonomous systems: its growing use in self-driving cars, drones, and robots is leading to new and innovative applications.
This is because AI computer vision technology allows these systems to perceive and understand their environments, making it possible for them to make decisions and act accordingly. AI computer vision is a critical component in the development of self-driving cars. AI algorithms can process data from the cameras and sensors installed in the car to identify objects such as pedestrians, other vehicles, road signs, and traffic signals in real time. This allows the car to decide how to react to its environment and drive safely without human intervention.

GPUs are expected to register the highest CAGR in the hardware segment during the forecast period

In the consumer market, graphics processing units (GPUs) are widely used in computing systems. They are preferred over central processing units (CPUs) because they can handle more complex data with greater efficiency. GPUs are mainly used for 3D applications, such as computer games and 3D authoring software. The major companies that provide processors are NVIDIA (US), Qualcomm (US), and Intel (US). NVIDIA GPUs are used in industrial inspection systems to process and analyze image data from cameras and other sensors in real time. Several companies in the industrial inspection space use NVIDIA's Jetson platform to perform AI vision tasks such as image classification, image segmentation, and object detection. The 256-core NVIDIA Jetson is built on the NVIDIA Pascal GPU architecture with 256 CUDA cores for high scalability and performance.

Request Sample Pages: https://www.marketsandmarkets.com/requestsampleNew.asp?id=141658064

AI in computer vision market in North America to hold the highest market share during the forecast period

Startups in the US are receiving funds from various organizations to research AI technology, which finds applications in autonomous drones and other aerial vehicles.
The country's primary focus is to overcome the reliability, safety, and autonomy challenges faced by industrial drones. Companies have therefore developed solutions that combine computer vision and deep learning algorithms to identify potential hazards and increase the endurance of industrial drones. Universities and research institutions in the US also have strong computer vision programs and are actively contributing to the development of the field.

Key players in the AI in computer vision market include NVIDIA Corporation (US), Intel Corporation (US), Microsoft (US), IBM Corporation (US), Qualcomm Technologies Inc. (US), Advanced Micro Devices, Inc. (US), Alphabet, Inc. (US), Amazon (US), Basler AG (Germany), Hailo (US), and Groq, Inc. (US). Other companies profiled in the report's scope include Sighthound, Inc. (US), Neurala, Inc. (US), Datagen (Israel), Graphcore (UK), Groopic (US), Ultraleap (US), Algolux (US), Athena Security (US), Snorkel AI (US), Vizseek (US), Robotic Vision Technologies (US), AMP Robotics (US), CureMetrix (US), and Creative Virtual (UK).
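The release's headline figures (USD 17.2 billion in 2023, USD 45.7 billion by 2028, a 21.5% CAGR) can be sanity-checked with the standard compound-growth relation, end value = start value × (1 + rate)^years. The Python sketch below is illustrative only: the helper names `cagr` and `project` are hypothetical, and it assumes five annual compounding periods between 2023 and 2028.

```python
# Sanity check of the MarketsandMarkets headline figures (illustrative sketch).
# Helper names are hypothetical; the inputs are the figures stated in the release.


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by growing start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached by compounding start_value at `rate` per year for `years` years."""
    return start_value * (1 + rate) ** years


START_2023 = 17.2    # USD billion, 2023 estimate from the release
END_2028 = 45.7      # USD billion, 2028 projection from the release
STATED_CAGR = 0.215  # 21.5% per year, as stated for 2023-2028
YEARS = 2028 - 2023  # assumption: five annual compounding periods

implied_rate = cagr(START_2023, END_2028, YEARS)
projected_2028 = project(START_2023, STATED_CAGR, YEARS)

print(f"Implied CAGR 2023-2028: {implied_rate:.1%}")
print(f"2028 value at a 21.5% CAGR: USD {projected_2028:.1f} billion")
```

Run as written, it reports an implied rate of about 21.6% and a projected 2028 value of about USD 45.5 billion, so the three published numbers agree to within rounding.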
Get 10% Free Customization on this Report: https://www.marketsandmarkets.com/requestCustomizationNew.asp?id=141658064

Browse Adjacent Market: Semiconductor and Electronics Market Research Reports & Consulting

Related Reports:
• Machine Vision Market by Component (Hardware, Software), Deployment (General, Robotic Cells), Product (PC-based Machine Vision System, Smart Camera-based Machine Vision System), End-user Industry, Region – 2030
• 3D Machine Vision Market with COVID-19 Impact Analysis by Offering (Hardware and Software), Product (PC-based and Smart Camera-based), Application, Vertical (Industrial and Non-Industrial) & Geography - Global Forecast till 2025
• Computer Vision Market by Component (Hardware (Camera, Frame Grabber, Optics, Processor) and Software (Deep Learning and Traditional Software)), Product (PC Based and Smart Camera Based), Application, Vertical - Global Forecasts to 2023
• Artificial Intelligence (Chipsets) Market by Technology (Machine learning, Natural Language Processing, Context Aware Computing, Computer Vision), Hardware (Processor, Memory, Network), End-User Industry, and region - Global Forecast to 2026
• Industrial Control & Factory Automation Market by Component, Solution (SCADA, PLC, DCS, MES, Industrial Safety, PAM), Industry (Process Industry and Discrete Industry) and Region (North America, Europe, APAC, RoW) – Global Forecast to 2027

About MarketsandMarkets™
MarketsandMarkets™ is a blue ocean alternative in growth consulting and program management, leveraging a man-machine offering to drive supernormal growth for progressive organizations in the B2B space. We have the widest lens on emerging technologies, making us proficient in co-creating supernormal growth for clients. The B2B economy is witnessing the emergence of $25 trillion of new revenue streams that are substituting existing revenue streams in this decade alone.
We work with clients on growth programs, helping them monetize this $25 trillion opportunity through our service lines: TAM Expansion, Go-to-Market (GTM) Strategy to Execution, Market Share Gain, Account Enablement, and Thought Leadership Marketing.

Built on the 'GIVE Growth' principle, we work with several Forbes Global 2000 B2B companies, helping them stay relevant in a disruptive ecosystem. Our insights and strategies are molded by our industry experts, cutting-edge AI-powered Market Intelligence Cloud, and years of research. The KnowledgeStore™ (our Market Intelligence Cloud) integrates our research and facilitates analysis of interconnections through a set of applications, helping clients look at the entire ecosystem and understand the revenue shifts happening in their industry.

To find out more, visit www.marketsandmarkets.com or follow us on Twitter, LinkedIn and Facebook.

Contact:
Mr. Aashish Mehra
MarketsandMarkets™ INC.
630 Dundee Road
Suite 430
Northbrook, IL 60062
USA: +1-888-600-6441
Email: [email protected]
Visit Our Web Site: https://www.marketsandmarkets.com/
Research Insight: https://www.marketsandmarkets.com/ResearchInsight/ai-in-computer-vision-market.asp
Content Source: https://www.marketsandmarkets.com/PressReleases/ai-in-computer-vision.asp
Logo: https://mma.prnewswire.com/media/660509/MarketsandMarkets_Logo.jpg

SOURCE MarketsandMarkets


| Computer Vision Market Size Worth $85.92 Billion by 2032: | https://www.globenewswire.com/news-rele… | 1 | Dec 26, 2025 00:03 | active | |

Computer Vision Market Size Worth $85.92 Billion by 2032:
Description: The global Computer Vision market is anticipated to grow from USD 15.19 billion to USD 85.92 billion in 10 years. The expanding utilization of computer...
Content:

November 27, 2023 12:00 ET | Source: The Brainy Insights

Newark, Nov. 27, 2023 (GLOBE NEWSWIRE) -- The Brainy Insights estimates that the global Computer Vision market, valued at USD 15.19 billion in 2022, will reach USD 85.92 billion by 2032. The adoption of computer vision for remote patient monitoring, telemedicine, and virtual healthcare visits is expected to grow, especially as healthcare systems seek ways to increase accessibility and reduce in-person interactions. Computer vision is increasingly used for precision agriculture, enabling crop health monitoring, automated harvesting, and efficient pesticide and fertilizer application, which contributes to improved crop yields and sustainable farming practices. Furthermore, the rise of autonomous delivery robots and drones presents opportunities for computer vision to enhance navigation, obstacle detection, and delivery efficiency in the e-commerce and logistics sectors. Computer vision can also enhance the educational experience through interactive and adaptive learning platforms, personalized tutoring, and student engagement analytics. Additionally, as AR and VR applications expand, computer vision plays a pivotal role in improving real-world tracking, object recognition, and natural interaction, creating opportunities in gaming, training, and virtual tourism. Moreover, computer vision can be employed for environmental monitoring, including wildlife conservation, pollution detection, and disaster response, contributing to the preservation of ecosystems. Besides, smart city initiatives offer opportunities for computer vision in traffic management, public safety, and environmental monitoring, contributing to more efficient and sustainable urban living.
Get more insights from the 230-page market research report @ https://www.thebrainyinsights.com/enquiry/sample-request/13807

Key Insights of the global Computer Vision market

Asia Pacific is expected to witness the highest market growth over the forecast period. The Asia-Pacific region is undergoing rapid economic growth, leading to increased consumer spending and business investment, and this growth fuels demand for innovative technologies like computer vision across various industries. Several nations in the region, such as China and India, are considered emerging markets, and these markets are seeing increased adoption of computer vision for diverse applications. Additionally, governments in the region are supporting the development and deployment of AI and computer vision technologies through policies, funding, and regulatory frameworks, creating a favourable environment for market growth. The region's healthcare sector is also expanding, and computer vision plays an important role in diagnostics, medical imaging, and telemedicine; these growing healthcare needs provide a substantial growth opportunity. Furthermore, precision agriculture is gaining traction in the region, with computer vision being used for crop monitoring, disease detection, and automation, in line with the region's agricultural needs. Besides, the region's universities and research institutions actively collaborate with industry players on computer vision research, and this synergy between academia and industry has accelerated advancements in the field.

In 2022, the hardware segment held the largest market share, at 70.25%, with market revenue of USD 10.67 billion. The component segment is divided into hardware, software, and service.
In 2022, the PC-based computer vision system segment dominated the market with the largest share, 62.48%, and revenue of USD 9.49 billion. The product type segment includes PC-based and smart camera-based computer vision systems.

In 2022, the quality assurance & inspection segment dominated the market with the highest share, 28.31%, and market revenue of USD 4.30 billion. The application segment is classified into 3D visualization & interactive 3D modelling, identification, measurement, positioning & guidance, predictive maintenance, and quality assurance & inspection.

In 2022, the industrial segment held the largest market share, at 53.68%, with market revenue of USD 8.15 billion. The vertical segment is split into industrial and non-industrial.

Advancements in the market

In October 2023, Cadence Design Systems, Inc. acquired Intrinsix Corporation. The acquisition gives Cadence a team of highly proficient engineers specializing in security algorithms, mixed-signal, radio frequency, and cutting-edge nodes, significantly bolstering its system and IC design services and substantially broadening its footprint in critical high-growth sectors such as aerospace and defence.

In September 2023, Matterport, Inc. unveiled the latest iteration of its intelligent digital twins, with robust new features driven by the company's progress in AI and data science.
The new features are currently available in beta, letting customers tap into various automated functions encompassing measurements, layouts, editing, and reporting, all derived from their digital twins. This automation represents a significant milestone, streamlining customer workflows by eliminating the need for manual measurements and reporting, thanks to the automatic processing of the vast troves of 3D data points captured within a Matterport digital twin.

Custom Requirements can be requested for this report @ https://www.thebrainyinsights.com/enquiry/request-customization/13807

Market Dynamics

Driver: Security and surveillance

Computer vision is the backbone of modern video surveillance systems. These systems employ cameras with computer vision algorithms to monitor and analyze the environment in real time, enabling a multitude of security functions. Computer vision can track objects, vehicles, or individuals as they move within the surveillance area, ensuring nothing goes unnoticed, which is particularly important for tracking suspicious activities. In parking facilities and at entry points, computer vision can recognize and log license plate information. This feature is invaluable for security and access control, allowing authorized vehicles to be identified and suspicious ones to be tracked. Additionally, by analyzing video feeds, computer vision systems can identify anomalies or unusual behaviour, such as loitering in restricted areas, unauthorized access, or unattended objects, and alert security personnel in real time. Furthermore, facial recognition is a prominent computer vision application in the security domain: it captures, analyzes, and matches faces against databases of known individuals.

Restraint: Limited robustness

Computer vision systems are immensely powerful in various applications, but they face challenges in complex and dynamic environments.
One of the primary challenges is dealing with diverse lighting conditions. Computer vision systems rely on consistent lighting to detect and recognize objects accurately. Changes in lighting, such as strong shadows, reflections, or low light, can make it difficult for the system to identify objects correctly. For example, in surveillance applications, shifts in lighting can obscure important details, making it hard to track or identify individuals or objects effectively. Objects in the real world often vary in size, shape, colour, and texture. Computer vision models should be trained on such variations to perform well in diverse scenarios; however, unexpected variations can still pose a challenge. For instance, in manufacturing, an assembly line may produce products with slight variations in size or appearance, making it difficult for the system to identify and inspect them consistently. Furthermore, environmental factors like rain, snow, fog, or dust can disrupt computer vision systems by obstructing the camera's view and reducing the quality of captured images. For autonomous vehicles, these environmental challenges can affect the vehicle's ability to detect obstacles and navigate safely. Also, many computer vision applications require real-time processing, and ensuring a system can process and interpret visual data within strict time constraints can be challenging, especially in complex environments with rapidly changing conditions.

Opportunity: Augmented and virtual reality (AR/VR)

Augmented reality and virtual reality applications have expanded significantly across sectors including gaming, education, training, and virtual tourism. Computer vision technology plays a central role in these applications, enabling real-world tracking and interaction, and is a key industry driver for immersive and interactive experiences.
AR games like "Pokémon GO" overlay virtual details onto the real world, letting participants interact with digital objects and characters in their physical surroundings; computer vision recognizes and tracks the environment, making these interactions possible. In VR gaming, computer vision can recognize hand and body movements, enabling gesture-based controls and enhancing player immersion. Players can reach out, grab objects, and perform actions in the virtual world, creating a more intuitive and engaging gaming experience. In addition, computer vision can map the player's surroundings in real time, adapting the virtual environment to match the physical space so that virtual objects and characters interact realistically with the player's surroundings. Besides, AR apps provide interactive learning experiences where students can explore virtual objects and simulations overlaid on their textbooks or physical surroundings, with computer vision recognizing the target objects and enhancing the educational content. VR simulations can also transport students to virtual environments, such as historical sites, the human body, or outer space, and computer vision ensures that students can interact with and explore these environments as if they were physically present.

Challenge: Data annotation and quality

Annotating data is a labour-intensive process that requires human annotators to review each image or video frame and add descriptive labels or tags. The time required depends on the complexity of the data and the desired level of detail; for large datasets, the time investment can be significant, delaying model development. Additionally, hiring and retaining skilled annotators can be costly, since annotators must be trained to understand the annotation guidelines and maintain a consistent labelling approach.
Additionally, the scale of annotation projects, especially for extensive datasets, can result in substantial labour and infrastructure expenses. Furthermore, annotation is error-prone, as annotators may mislabel or incorrectly tag images. This can lead to inaccuracies in the training data, which in turn affect the performance of computer vision models. Consistency and quality control are crucial to minimizing errors, but they can be challenging to maintain across large annotation projects.

Have a question? Speak to Research Analyst @ https://www.thebrainyinsights.com/enquiry/speak-to-analyst/13807

Some of the major players operating in the global Computer Vision market are:
• Baumer
• Basler AG
• Cognex Corporation
• Cadence Design Systems, Inc.
• CEVA Inc.
• Intel Corporation
• IBM
• KEYENCE Corporation
• Matterport, Inc.
• MediaTek Inc.
• Microsoft
• National Instruments Corporation
• NVIDIA
• Omron Corporation
• Qualcomm
• SAS Institute
• Synopsys, Inc.
• Teledyne Technologies Incorporated
• Texas Instruments Incorporated
• Tordivel AS

Key segments covered in the market:

By Component
• Hardware
• Software
• Service

By Product Type
• PC-Based Computer Vision System
• Smart Camera-Based Computer Vision System

By Application
• 3D Visualization & Interactive 3D Modelling
• Identification
• Measurement
• Positioning & Guidance
• Predictive Maintenance
• Quality Assurance & Inspection

By Vertical
• Industrial
• Non-Industrial

By Region
• North America (U.S., Canada, Mexico)
• Europe (Germany, France, the UK, Italy, Spain, Rest of Europe)
• Asia-Pacific (China, Japan, India, Rest of APAC)
• South America (Brazil and the Rest of South America)
• The Middle East and Africa (UAE, South Africa, Rest of MEA)

About the report:
The market is analyzed based on value (USD Billion). All the segments have been analyzed on a worldwide, regional, and country basis. The study includes the analysis of more than 30 countries for each segment.
The report analyses driving factors, opportunities, restraints, and challenges to gain critical market insight. The study includes Porter's five forces model, attractiveness analysis, product analysis, supply and demand analysis, competitor position grid analysis, and distribution and marketing channels analysis.

About The Brainy Insights:
The Brainy Insights is a market research company aimed at providing companies with actionable insights through data analytics to improve their business acumen. We have a robust forecasting and estimation model to meet clients' objectives of high-quality output within a short span of time. We provide both customized (client-specific) and syndicated reports. Our repository of syndicated reports is diverse across all categories and sub-categories across domains. Our customized solutions are tailored to meet clients' requirements, whether they are looking to expand or planning to launch a new product in the global market.

Contact Us
Avinash D
Head of Business Development
Phone: +1-315-215-1633
Email: sales@thebrainyinsights.com
Web: www.thebrainyinsights
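The 2022 segment figures in The Brainy Insights release can be cross-checked against the market total: each reported revenue should be roughly its reported share of the USD 15.19 billion 2022 market. This is a minimal Python sketch, assuming every share is measured against that single total (the release does not say so explicitly). The `SEGMENTS` table and `check_segments` helper are hypothetical names, and because the four rows come from four different segmentations, their shares are not expected to sum to 100%.

```python
# Cross-check of The Brainy Insights' 2022 segment figures (illustrative sketch).
# Assumption: every share is a share of the same USD 15.19 billion 2022 total.

TOTAL_2022 = 15.19  # USD billion, global computer vision market in 2022

# Hypothetical lookup table: segment -> (reported share, reported revenue in USD billion).
# The four rows come from four different segmentations, so shares need not sum to 1.
SEGMENTS = {
    "hardware (component)": (0.7025, 10.67),
    "PC-based system (product type)": (0.6248, 9.49),
    "quality assurance & inspection (application)": (0.2831, 4.30),
    "industrial (vertical)": (0.5368, 8.15),
}


def check_segments(total, segments, tolerance=0.01):
    """Map each segment to (expected revenue, reported revenue, within tolerance?)."""
    results = {}
    for name, (share, reported) in segments.items():
        expected = total * share
        results[name] = (expected, reported, abs(expected - reported) <= tolerance)
    return results


for name, (expected, reported, ok) in check_segments(TOTAL_2022, SEGMENTS).items():
    status = "ok" if ok else "mismatch"
    print(f"{name}: share x total = {expected:.2f}, reported = {reported:.2f} ({status})")
```

With the published numbers, all four segments land within USD 0.01 billion of share × total, which suggests the shares and revenues were derived from the same base figure.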


| AI and Computer Vision: A Comprehensive Guide from SaM Solutions | https://samsolutions.medium.com/ai-and-… | 0 | Dec 26, 2025 00:03 | active | |

AI and Computer Vision: A Comprehensive Guide from SaM Solutions
Description: AI and Computer Vision: A Comprehensive Guide from SaM Solutions Imagine a factory camera catching a bad product before it even leaves the factory building. Or ...
Content:


| Artificial Intelligence (AI) in Computer Vision Market to | https://www.globenewswire.com/news-rele… | 1 | Dec 26, 2025 00:03 | active | |

Artificial Intelligence (AI) in Computer Vision Market to
Description: Westford USA, June 05, 2024 (GLOBE NEWSWIRE) -- SkyQuest projects that Artificial Intelligence (AI) in Computer Vision Market will attain a value of USD...
Content:

June 05, 2024 09:00 ET | Source: SkyQuest Technology Consulting Pvt. Ltd.

Westford USA, June 05, 2024 (GLOBE NEWSWIRE) -- SkyQuest projects that the Artificial Intelligence (AI) in Computer Vision Market will attain a value of USD 81.68 Billion by 2031, with a CAGR of 21.5% over the forecast period (2024-2031). AI computer vision is revolutionizing Industry 4.0 by enabling self-driving cars to understand visual data. Combined, artificial intelligence and computer vision make it possible to deploy fleets of highly capable robots in warehouse and logistics operations. Based on what they observe, such machines can perceive, comprehend, and make decisions. As a result, workflows become more streamlined, accurate, and productive, increasing profitability.

Download a detailed overview: https://www.skyquestt.com/sample-request/ai-in-computer-vision-market

Browse in-depth TOC on the "Artificial Intelligence (AI) in Computer Vision Market"

Artificial Intelligence (AI) in Computer Vision Market Overview:

The Dominant Stance of PC-Based Computer Vision Systems Is Made Possible by High Processing Power and Flexibility

PC-based computer vision systems lead the AI in computer vision market globally. They are highly adaptable and offer high processing capability. PC-based systems hold a significant share of the market for complex vision tasks thanks to better GPU performance, a growing number of high-resolution images requiring processing, and scalability demands.

Urgent Need for Precise Diagnosis and Improved Patient Care Propels Healthcare Industry to Emerge as Fastest-Growing Sector

The healthcare industry is at the forefront of the global AI in computer vision market, driven by the urgent need for accurate diagnosis and enhanced patient care.
Reasons behind this dominance include the increased application of AI in medical imaging, the need for automated diagnostic tools, and machine learning algorithms that boost diagnostic precision and operational effectiveness in healthcare.

North America Dominant with its Favorable Government Measures Designed to Promote Use of Computer Vision

The computer vision AI market in North America is anticipated to grow at the fastest rate during the forecast period, potentially boosted by the region's supportive government programmes aimed at promoting the use of computer vision. Computer vision and artificial intelligence have also been brought into public health departments for real-world tests and applications. The United States General Services Administration's Artificial Intelligence Center of Excellence also helps organisations create AI applications using NLP, deep learning, machine vision, robotic process automation, and smart process development.

Request Free Customization of this report: https://www.skyquestt.com/speak-with-analyst/ai-in-computer-vision-market

View report summary and Table of Contents (TOC): https://www.skyquestt.com/report/ai-in-computer-vision-market

Related Reports:
• Global Artificial Intelligence Market
• Global Artificial Intelligence of Things (AIoT) Market
• Global Edge Artificial Intelligence (AI) Market
• Global Artificial Intelligence (AI) Hardware Market
• Global Artificial Intelligence (AI) in Banking, Financial Services, and Insurance (BFSI) Market

About Us:
SkyQuest is an IP-focused Research and Investment Bank and Accelerator of Technology and assets.
We provide access to technologies, markets and finance across sectors viz. Life Sciences, CleanTech, AgriTech, NanoTech and Information & Communication Technology. We work closely with innovators, inventors, innovation seekers, entrepreneurs, companies and investors alike in leveraging external sources of R&D. Moreover, we help them in optimizing the economic potential of their intellectual assets. Our experiences with innovation management and commercialization have expanded our reach across North America, Europe, ASEAN and Asia Pacific. Contact: Mr. Jagraj Singh Skyquest Technology 1 Apache Way, Westford, Massachusetts 01886 USA (+1) 351-333-4748 Email: sales@skyquestt.com Visit Our Website: https://www.skyquestt.com/
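The release gives only the 2031 figure (USD 81.68 billion) and the growth rate (21.5% CAGR). As a rough sanity check, and assuming simple annual compounding over the seven years from 2024 to 2031 (the report's actual base-year value and method are not stated here), the implied starting size can be backed out from V_2031 = V_2024 × (1 + r)^7. The helper names below are illustrative only.

```python
# Back out the base-year market size implied by a compound annual growth rate.
# Assumption: growth compounds once per year over the 7 years from 2024 to 2031.

def implied_base_value(final_value: float, cagr: float, years: int) -> float:
    """Return V0 such that V0 * (1 + cagr) ** years == final_value."""
    return final_value / (1.0 + cagr) ** years


def project(value: float, cagr: float, years: int) -> float:
    """Compound `value` forward by `years` periods at rate `cagr`."""
    return value * (1.0 + cagr) ** years


final_2031 = 81.68   # USD billion, the release's 2031 forecast
cagr = 0.215         # 21.5% per year
years = 2031 - 2024  # 7 compounding periods (assumed)

base_2024 = implied_base_value(final_2031, cagr, years)
print(f"Implied 2024 market size: USD {base_2024:.1f} billion")
# prints: Implied 2024 market size: USD 20.9 billion
```

In other words, the forecast amounts to the market growing roughly 3.9-fold (1.215^7 ≈ 3.91) over the period, if the 21.5% rate holds every year.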

Images (1):

|

|||||

| Computer Vision: A Comprehensive Guide to Machine Perception | https://medium.com/@thecodeyatri/comput… | 0 | Dec 26, 2025 00:03 | active | |

Computer Vision: A Comprehensive Guide to Machine Perception
Description: Computer Vision: A Comprehensive Guide to Machine Perception Computer vision represents one of the most transformative fields in artificial intelligence, enabli... Content: |

|||||

| AI and Computer Vision: The Future of Visual Intelligence | https://samsolutions.medium.com/ai-and-… | 0 | Dec 26, 2025 00:03 | active | |

AI and Computer Vision: The Future of Visual Intelligence
Description: AI and Computer Vision: The Future of Visual Intelligence Machines are watching us and the world around, it’s true. Computer vision has moved from research la... Content: |

|||||

| Computer Vision | https://tareqshahalam.medium.com/comput… | 0 | Dec 26, 2025 00:03 | active | |

Computer Vision
URL: https://tareqshahalam.medium.com/computer-vision-2c32aaeea29d Description: Computer Vision Computer Vision is a field of computer science that enables machines to ‘see’ and understand the visual world. It involves to teach computer... Content: |

|||||

| Computer Vision: 2025 Trends & Insights | https://medium.com/@API4AI/computer-vis… | 0 | Dec 26, 2025 00:03 | active | |

Computer Vision: 2025 Trends & Insights
URL: https://medium.com/@API4AI/computer-vision-milestones-trends-future-insights-2d75bd6af985 Description: A clear guide to computer vision’s key breakthroughs, 2025 trends, and smart adoption strategies using ready-to-use APIs or custom AI tools. Content: |

|||||

| Robots Finally Get Active, Human-Like Vision - Hackster.io | https://www.hackster.io/news/robots-fin… | 1 | Dec 26, 2025 00:03 | active | |

Robots Finally Get Active, Human-Like Vision - Hackster.io
URL: https://www.hackster.io/news/robots-finally-get-active-human-like-vision-93ef3aff167c Description: EyeVLA is a robotic “eyeball” that actively moves and zooms to gather clearer visuals, giving robots more human-like, flexible perception. Content:

The way that a computer analyzes a visual scene is vastly different from how humans process visual information. Modern computer vision algorithms are typically fed a static image, which they then analyze to identify certain types of objects, make a classification, or perform some other related task. Humans understand visual data in a far different, and much more interactive, way. We don’t just take one quick look and then draw all of our conclusions about a scene. Rather, we look around, zoom in on certain areas, and focus on items of interest to us. A group led by researchers at Shanghai Jiao Tong University realized that by adopting a similar approach, artificial systems might be able to improve their performance. For this reason, they developed what they call EyeVLA, a robotic eyeball for active visual perception. Using this system, robots can take proactive measures that help them better understand, and interact with, their surroundings. In most embodied AI systems, cameras are mounted in fixed positions. These setups work well for acquiring broad overviews of an environment but struggle to capture fine-grained details without expensive, high-resolution sensors. EyeVLA directly tackles this limitation by mimicking the mobility and focusing ability of the human eye. Built on a simple 2D pan-tilt mount paired with a zoomable camera, the system can rotate, tilt, and adjust its focal length to gather more useful visual information on demand. The team adapted the Qwen2.5-VL (7B) vision-language model and trained it, via reinforcement learning, to interpret both visual scenes and natural-language instructions. Instead of passively analyzing whatever image it receives, EyeVLA predicts a sequence of “action tokens”: discrete commands that correspond to camera movements.
These tokens let the model plan viewpoint adjustments in much the same way that language models plan the next word in a sentence. To guide these decisions, the researchers integrated 2D bounding-box information into the model’s reasoning chain. This allows EyeVLA to identify areas of potential interest and then zoom in to collect higher-quality data. Their hierarchical token-based encoding scheme also compresses complex camera motions into a small number of tokens, making the system efficient enough to run within limited computational budgets. Experiments in indoor environments showed that EyeVLA can actively acquire clearer and more accurate visual observations than fixed RGB-D camera systems. Even more impressively, the model learned these capabilities using only about 500 real-world training samples, thanks to reinforcement learning and pseudo-labeled data expansions. By combining wide-area awareness with the ability to zoom in on fine details, EyeVLA gives embodied robots a more human-like awareness of their surroundings. The researchers envision future applications for this technology in areas such as infrastructure inspection, warehouse automation, household robotics, and environmental monitoring. As robotic systems take on increasingly complex tasks, technologies like EyeVLA may become essential for enabling them to perceive — and interact with — the world as flexibly as humans do. Hackster.io, an Avnet Community © 2025
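The article says EyeVLA's hierarchical token-based encoding compresses camera motions into a small number of tokens, but it does not describe the scheme itself. The sketch below is a hypothetical coarse/fine quantization of pan, tilt, and zoom, meant only to illustrate the general idea: a two-level code keeps the token vocabulary small (a handful of coarse bins plus 10 fine bins per axis) while still allowing one-degree precision. The bin sizes, token names, and helper functions are invented for illustration and are not EyeVLA's.

```python
from typing import List, Tuple

FINE_BINS = 10   # hypothetical: 1-degree fine steps inside a 10-degree coarse bin
ZOOM_STEP = 0.5  # hypothetical: zoom quantized in 0.5x increments


def _value(token: str) -> int:
    """Extract the integer payload from a token such as '<pan_c:-3>'."""
    return int(token.split(":")[1].rstrip(">"))


def encode_axis(name: str, degrees: float) -> List[str]:
    """Quantize an angle to whole degrees, then split it into coarse + fine tokens."""
    q = round(degrees)                   # whole-degree quantization
    coarse, fine = divmod(q, FINE_BINS)  # floor semantics: 0 <= fine < FINE_BINS
    return [f"<{name}_c:{coarse}>", f"<{name}_f:{fine}>"]


def decode_axis(tokens: List[str]) -> int:
    """Invert encode_axis: coarse * FINE_BINS + fine."""
    coarse, fine = _value(tokens[0]), _value(tokens[1])
    return coarse * FINE_BINS + fine


def encode_move(pan: float, tilt: float, zoom: float) -> List[str]:
    """Encode one camera adjustment as a fixed-length, five-token sequence."""
    zoom_level = round(zoom / ZOOM_STEP)
    return encode_axis("pan", pan) + encode_axis("tilt", tilt) + [f"<zoom:{zoom_level}>"]


def decode_move(tokens: List[str]) -> Tuple[int, int, float]:
    """Recover (pan degrees, tilt degrees, zoom factor) from five tokens."""
    pan = decode_axis(tokens[0:2])
    tilt = decode_axis(tokens[2:4])
    zoom = _value(tokens[4]) * ZOOM_STEP
    return pan, tilt, zoom


tokens = encode_move(pan=-23.4, tilt=7.0, zoom=2.0)
print(tokens)               # ['<pan_c:-3>', '<pan_f:7>', '<tilt_c:0>', '<tilt_f:7>', '<zoom:4>']
print(decode_move(tokens))  # (-23, 7, 2.0)
```

A model emitting these tokens predicts five discrete symbols per camera move, much as a language model predicts the next word, instead of regressing continuous angles.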

Images (1):

|

|||||

| What is Computer Vision? Explained Simply | https://pub.aimind.so/what-is-computer-… | 0 | Dec 26, 2025 00:03 | active | |

What is Computer Vision? Explained Simply
Description: Uncover the transformative power of AI Computer Vision in our comprehensive guide. Explore its history, applications, future, and ethical implications. Content: |

|||||

| Computer Vision in 2025: From Fundamentals to Ethical Applications | https://medium.com/@angelosorte1/comput… | 0 | Dec 26, 2025 00:03 | active | |

Computer Vision in 2025: From Fundamentals to Ethical Applications
Description: Computer Vision in 2025: From Fundamentals to Ethical Applications Introduction: What is Computer Vision? Computer Vision (CV) is a branch of artificial intelli... Content: |

|||||

| MIT Teaches Soft Robots Body Awareness Through AI And Vision | https://www.forbes.com/sites/jenniferki… | 0 | Dec 26, 2025 00:03 | active | |

MIT Teaches Soft Robots Body Awareness Through AI And Vision
Description: MIT’s CSAIL researchers developed a system that lets soft robots learn how their bodies move using only vision and AI with no sensors, models or manual progra... Content: |

|||||

| Computer Vision: The Eyes of Artificial Intelligence | https://medium.com/@kashifabdullah581/c… | 0 | Dec 26, 2025 00:03 | active | |

Computer Vision: The Eyes of Artificial Intelligence
Description: Computer vision is like giving computers eyes and a brain to understand photos and videos. Just like you can look at a picture and recognize a cat, computer vis... Content: |

|||||

| Teaching Robots to See: Building a Computer Vision System for … | https://medium.com/@beyondbytes2/teachi… | 0 | Dec 26, 2025 00:03 | active | |

Teaching Robots to See: Building a Computer Vision System for Warehouse Automation
Description: Computer vision is revolutionizing warehouse automation, enabling robots to navigate complex environments and perform precise operations with unprecedented accu... Content: |

|||||

| Florida is deploying robot rabbit lures that cost $4,000 apiece … | https://biztoc.com/x/ead6c6333c451586?r… | 0 | Dec 25, 2025 08:00 | active | |

Florida is deploying robot rabbit lures that cost $4,000 apiece in a desperate push to solve the Everglades’ python problem
URL: https://biztoc.com/x/ead6c6333c451586?ref=ff Description: They look, move and even smell like the kind of furry Everglades marsh rabbit a Burmese python would love to eat. But these bunnies are robots meant to lure… Content: |

|||||

| Amazon accelerates the age of robots: 600,000 jobs at … | https://www.elcolombiano.com/inicio/emp… | 1 | Dec 25, 2025 08:00 | active | |

Amazon accelerates the age of robots: 600,000 jobs at risk
URL: https://www.elcolombiano.com/inicio/empleos-en-riesgo-inteligencia-artificial-HC30541152 Description: A series of documents revealed by The New York Times and The Verge indicates that Amazon plans to replace 600,000 workers with robotic systems and... Content:

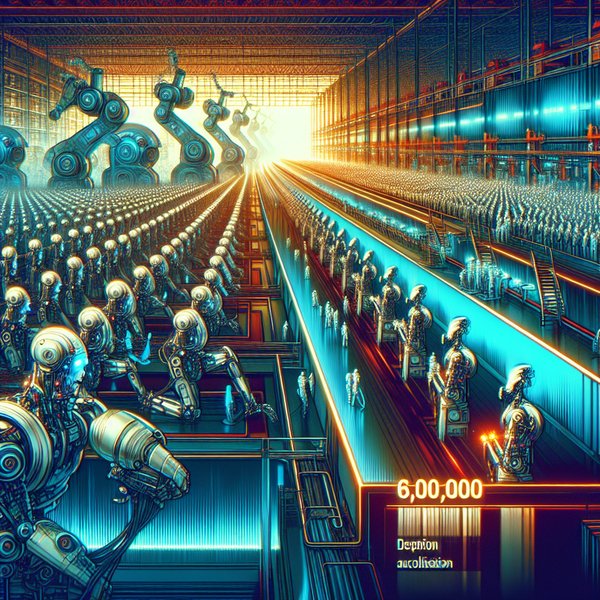

A leaked report on Amazon's plans to replace hundreds of thousands of workers with robots confirms that artificial intelligence (AI) is transforming employment faster than expected. A series of documents revealed by The New York Times and The Verge indicates that Amazon plans to replace 600,000 workers with robotic and artificial intelligence systems, automating up to 75% of its operations before 2033. In the United States alone, the company would eliminate more than 160,000 jobs by 2027. Although the company insists that it is still hiring, analysts agree that Amazon's case reflects a global phenomenon: automation is advancing faster than companies and employees can adapt. "Amazon's case is just the tip of the iceberg. AI will not only replace jobs: it is reshaping the way we work and which skills will remain essential," said a spokesperson for the editorial team at LiveCareer, an organization that analyzes labor trends driven by technological progress. According to LiveCareer's new study, the ten jobs at greatest short-term risk of automation are those built on repetitive, structured, or easily digitized tasks. The report also suggests career transition paths for those who want to adapt. The list is extensive:

- Data entry clerks: automated by optical character recognition and machine learning systems. Suggested path: data analysis and management (advanced Excel, SQL, Python).
- Telemarketers: replaced by voice AI and conversational chatbots. Suggested path: digital marketing, consultative sales, CRM management.
- Customer service agents: displaced by 24/7 chatbots. Suggested path: customer experience (CX) and advanced technical support.
- Store cashiers: replaced by self-checkouts and staffless stores. Suggested path: retail management, operations, and logistics.
- Proofreaders and copy editors: outpaced by tools such as Grammarly or ChatGPT. Suggested path: content strategy, communications, and SEO.
- Paralegals: affected by automated document review. Suggested path: legal tech, compliance, and legal project management.
- Accountants: replaced by AI software for reconciliations and reporting. Suggested path: financial analysis and strategic planning.
- Restaurant workers: replaced by kitchen robots and self-service. Suggested path: food-service management and food technology.
- Warehouse workers: displaced by logistics robots and smart inventory systems. Suggested path: logistics supervision and applied robotics.
- Junior market analysts: affected by automated data analysis. Suggested path: data storytelling and commercial strategy.

The LiveCareer study warns that AI will not destroy human employment but will profoundly transform it. According to its data, 41% of companies expect to reduce staff before 2030, but about 170 million new jobs will be created, leaving a net positive balance of 78 million positions. Administrative, sales, and technical support sectors will be the most affected, but new opportunities will emerge in data analysis, technology, education, and creative work. "Robots can execute tasks, but they cannot lead teams, inspire, or make ethical decisions. The new competitive advantage will be human: critical thinking, empathy, and adaptability," LiveCareer said. The report recommends that workers strengthen their digital and analytical skills by mastering tools such as Power BI, Python, or generative AI platforms.
It also recommends developing soft skills such as leadership, communication, problem-solving, and decision-making, and updating résumés and professional profiles to highlight adaptability and continuous learning, key factors in a changing labor market. Companies, for their part, should see artificial intelligence as a strategic ally: not merely a cost-cutting tool, but a means to raise productivity and open new business models. LiveCareer's warning echoes statements by Nobel economics laureate Daron Acemoglu, who cautioned that if Amazon manages to automate profitably, "other companies will follow the same path," turning one of the world's largest employers into a potential net destroyer of jobs. The analysis draws on data from the International Labour Organization (ILO), CNN Business, and CIO, and reaches a forceful conclusion: artificial intelligence will mark a turning point in the world of work, and those who prepare now will lead the digital economy of the next decade.

Images (1):

|

|||||

| Florida is deploying robot rabbit lures that cost $4,000 apiece … | https://fortune.com/2025/08/28/florida-… | 0 | Dec 25, 2025 08:00 | active | |

Florida is deploying robot rabbit lures that cost $4,000 apiece in a desperate push to solve the Everglades’ python problem
URL: https://fortune.com/2025/08/28/florida-pythons-everglades-robot-rabbits/ Description: “Removing them is fairly simple. It's detection. We're having a really hard time finding them,” said Mike Kirkland of the South Florida Water Management Dis... Content: |

|||||

| 🤖 Restaurant Service Robots: The Future of Hospitality 🍽️ | https://medium.com/@karanshvnkr/restaur… | 0 | Dec 25, 2025 08:00 | active | |

🤖 Restaurant Service Robots: The Future of Hospitality 🍽️
Description: Imagine walking into a restaurant and being greeted by a friendly robot that takes your order, delivers your food, and even guides you to your table — all wit... Content: |

|||||

| 15 TikTok Videos About 'Clankers', a New Slur for Robots | https://gizmodo.com/15-tiktok-videos-ab… | 1 | Dec 25, 2025 08:00 | active | |

15 TikTok Videos About 'Clankers', a New Slur for Robots
URL: https://gizmodo.com/15-tiktok-videos-about-clankers-a-new-slur-for-robots-2000638817 Description: "I am not robophobic. I have an Alexa at home." Content:

Terms like “social media,” “podcast,” and “internet” emerged years ago as ways to talk about the latest advancements in the world of technology. And over the past month, we’ve seen some new terms popping up in the world of tech, from clanker to slopper, even if they seem to be mostly tongue-in-cheek at this point. What’s a clanker? It’s a derogatory word for a robot, itself a term coined in 1920 for a Czech play about dangerous mechanical men. And given that humanoid robots are still pretty rare in everyday life, the term clanker has emerged as a way to joke about a future where robots face discrimination in jobs and relationships. That’s what the folks of TikTok have been doing with some frequency since the word started to spread widely online in early July. As io9 reported Monday, clanker as a slur actually originates from the Star Wars universe, starting with the 2005 video game Republic Commando and becoming more popular with the Clone Wars animated series in 2008. But the term has taken off recently as a way to joke about our uneasiness with new technology in 2025.

Some of the videos currently circulating on social media are directed at robots that already show up in daily life, like the bots that sometimes clean supermarkets. But other videos imagine what the future will look like, placing the viewer in an era, maybe 20 or 50 years from now, when robots will presumably be much more common. The jokes often use 20th-century stereotypes around racial integration, mimicking the bigoted responses of white people to civil rights advancements in the U.S. and repurposing them for a future where humans are uncomfortable with a robotic other.

Obviously, we don’t know how common humanoid robots will be in five, 10, or 20 years. Elon Musk has promised that “billions” of robots will be sold around the globe within your lifetime. And while Musk is often far too, let’s say, optimistic about his tech timelines, it seems perfectly reasonable that we will have more humanoid robots walking around in the near future. For his part, Musk has so far only shown off teleoperated robots that are closer to a magic trick than a vision of the future. But technological change can be scary. And it’s interesting to see how content creators channel those fears by imagining a future where robots are oppressed, something that’s incredibly common in science fiction, even before the word clanker was coined. Whatever you think of the term (and some people are uncomfortable with it as coded racism rather than a comment on racism), it’s everywhere on TikTok right now.

Every new era sees an expansion of the tech lexicon. That’s just how the march of time works. And it’s unclear whether clanker will have any staying power beyond the summer of 2025. Another term, slopper, has seen a similar rise: it refers to people who use generative artificial intelligence for everything. There’s just no predicting which new words are going to stick. After all, the internet was almost called the catenet. Language works in really funny ways. ©2025 GIZMODO USA LLC. All rights reserved.

Images (1):

|

|||||

| Clearing Up Double Vision in Robots - Hackster.io | https://www.hackster.io/news/clearing-u… | 1 | Dec 25, 2025 08:00 | active | |

Clearing Up Double Vision in Robots - Hackster.io
URL: https://www.hackster.io/news/clearing-up-double-vision-in-robots-9e27a72b1889 Description: Caltech's VPEngine is a framework that makes robots faster and more efficient by cutting out redundant visual processing tasks. Content:

In the world of robotics, visual perception is not a single task. Rather, it is composed of a number of subtasks, ranging from feature extraction to image segmentation, depth estimation, and object detection. Each of these subtasks typically executes in isolation, after which the individual results are merged to contribute to a robot’s overall understanding of its environment. This arrangement gets the job done, but it is not especially efficient. Many of the underlying machine learning models need to perform some of the same steps, like feature extraction, before they move on to their task-specific components. That not only wastes time but, for robots running on battery power, also limits how long they can operate between charges. A group of researchers at the California Institute of Technology came up with a clever solution to this problem that they call the Visual Perception Engine (VPEngine). It is a modular framework created to enable efficient GPU usage for visual multitasking while maintaining extensibility and developer accessibility. VPEngine leverages a shared backbone and parallelization to eliminate unnecessary GPU-CPU memory transfers and other computational redundancies. At the core of VPEngine is a foundation model (in their implementation, DINOv2) that extracts rich visual features from images. Instead of running multiple perception models in sequence, each repeating the same feature extraction process, VPEngine computes those features once and shares them across multiple task-specific “head” models. These head models are lightweight and specialize in functions such as depth estimation, semantic segmentation, or object detection.
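The shared-backbone idea can be illustrated with a toy, single-process sketch: the expensive feature extractor runs once per frame, and every lightweight head consumes the same features. Everything here is hypothetical (the class, the toy backbone standing in for DINOv2, the three heads, and the priority rule); the real VPEngine runs heads in parallel GPU processes via CUDA MPS, whereas this sketch simply runs them in priority order on the CPU.

```python
from typing import Callable, Dict, List

Image = List[List[float]]  # toy grayscale image
Features = List[float]     # toy feature vector


class SharedBackbonePipeline:
    """Run one backbone per frame and fan its features out to task heads."""

    def __init__(self, backbone: Callable[[Image], Features],
                 heads: Dict[str, Callable[[Features], object]]) -> None:
        self.backbone = backbone
        self.heads = heads
        self.priorities: Dict[str, int] = {name: 0 for name in heads}

    def set_priority(self, name: str, priority: int) -> None:
        """Higher-priority heads run first (e.g. obstacle avoidance in clutter)."""
        self.priorities[name] = priority

    def run(self, image: Image) -> Dict[str, object]:
        features = self.backbone(image)  # feature extraction happens once per frame
        order = sorted(self.heads, key=lambda n: -self.priorities[n])  # stable sort
        return {name: self.heads[name](features) for name in order}


backbone_calls = 0


def toy_backbone(image: Image) -> Features:
    """Stand-in for an expensive feature extractor; counts how often it runs."""
    global backbone_calls
    backbone_calls += 1
    return [sum(row) / len(row) for row in image]  # per-row mean intensity


heads = {  # hypothetical lightweight heads
    "depth": lambda f: max(f),
    "segmentation": lambda f: [v > 0.5 for v in f],
    "detection": lambda f: sum(1 for v in f if v > 0.8),
}

pipeline = SharedBackbonePipeline(toy_backbone, heads)
pipeline.set_priority("depth", 10)
result = pipeline.run([[0.2, 0.4], [0.9, 0.9], [0.6, 0.7]])
print(list(result), backbone_calls)  # ['depth', 'segmentation', 'detection'] 1
```

A sequential design in which each task owned its own backbone would call the feature extractor three times for this frame; here it runs once, which is the redundancy the article describes VPEngine eliminating.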
The team designed the framework with several key requirements in mind: fast inference for quick responses, predictable memory usage for reliable long-term deployment, flexibility for different robotic applications, and dynamic task prioritization. The last of these is particularly important, as robots often need to shift their focus depending on context, for instance prioritizing obstacle avoidance in cluttered environments or focusing on semantic understanding when interacting with humans. VPEngine achieves much of its efficiency by making heavy use of NVIDIA’s CUDA Multi-Process Service. This allows the separate task heads to run in parallel, ensuring high GPU utilization while avoiding bottlenecks. The researchers also built custom inter-process communication tools so that GPU memory could be shared directly between processes without costly transfers. Each module runs independently, meaning that a failure in one perception task will not bring down the entire system, which is an important consideration for safety and reliability. On the NVIDIA Jetson Orin AGX platform, the team achieved real-time performance at 50 Hz or greater with TensorRT-optimized models. Compared to traditional sequential execution, VPEngine delivered up to a threefold speedup while maintaining a constant memory footprint. Beyond performance, the framework is also designed to be developer-friendly. Written in Python with C++ bindings for ROS2, it is open source and highly modular, enabling rapid prototyping and customization for a wide variety of robotic platforms. By cutting out redundant computation and enabling smarter multitasking, the VPEngine framework could help robots become faster, more power-efficient, and ultimately more capable in dynamic environments.

Images (1):

|

|||||

| GitHub - ashishjsharda/humanoid-os: Open-source operating system for humanoid robots. Real-time … | https://github.com/ashishjsharda/humano… | 1 | Dec 25, 2025 08:00 | active | |

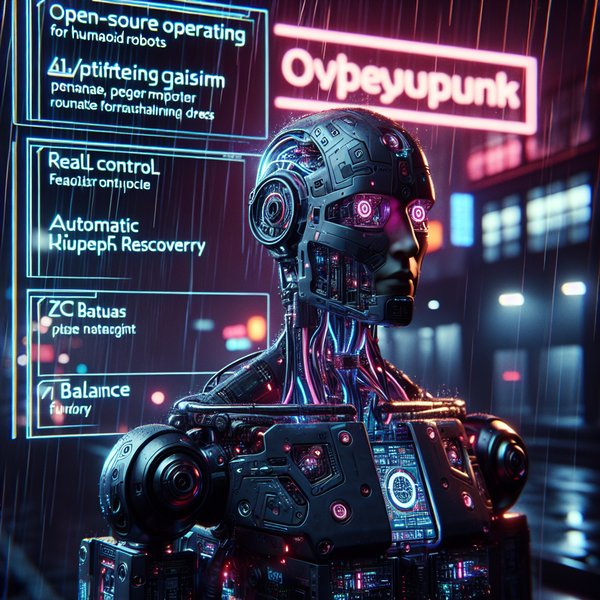

GitHub - ashishjsharda/humanoid-os: Open-source operating system for humanoid robots. Real-time control, 7 gaits, automatic push recovery, ZMP balance. MIT licensed.
URL: https://github.com/ashishjsharda/humanoid-os Description: Open-source operating system for humanoid robots. Real-time control, 7 gaits, automatic push recovery, ZMP balance. MIT licensed. - ashishjsharda/humanoid-os Content:

Open-source operating system for humanoid robots. Validation test of the Zero-G Kinematics Engine and Joint Control Loop. HumanoidOS provides a complete software stack for bipedal humanoid robots, from real-time control to autonomous locomotion. Built for researchers, hobbyists, and companies who need a solid foundation without reinventing the wheel. Contributions welcome! Open an issue or submit a PR. MIT License - see LICENSE. Built after working on robotics projects at Apple, Formant, and various startups. Got tired of rebuilding the same control systems over and over, so decided to open-source a solid foundation.
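The repository lists "ZMP balance" without explaining it. For readers unfamiliar with the term, here is a minimal sketch of the textbook zero-moment-point check under the linear inverted pendulum (cart-table) assumption of constant center-of-mass (CoM) height, where the ZMP along one axis is p = x − (z_c / g)·ẍ. It is a generic illustration, not code from HumanoidOS; the function names, the one-dimensional support interval (standing in for the real support polygon), and the numbers are all made up.

```python
G = 9.81  # gravitational acceleration, m/s^2


def zmp_x(com_x: float, com_height: float, com_accel_x: float) -> float:
    """Cart-table / linear inverted pendulum ZMP estimate along one axis.

    Assumes constant CoM height z_c, so p = x - (z_c / g) * x_ddot.
    """
    return com_x - (com_height / G) * com_accel_x


def is_balanced(zmp: float, support_min: float, support_max: float) -> bool:
    """ZMP criterion: dynamically balanced if the ZMP lies inside the support region."""
    return support_min <= zmp <= support_max


# Hypothetical robot: CoM 0.8 m high, foot spanning -0.10 m to +0.12 m along x.
gentle = zmp_x(com_x=0.0, com_height=0.8, com_accel_x=0.5)  # about -0.041 m
shove = zmp_x(com_x=0.0, com_height=0.8, com_accel_x=3.0)   # about -0.245 m
print(is_balanced(gentle, -0.10, 0.12))  # True
print(is_balanced(shove, -0.10, 0.12))   # False: ZMP leaves the foot, so recovery is needed
```

A push-recovery routine would react to the second case, for example by stepping so that the support region moves under the ZMP.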

Images (1):

|

|||||

| Hugging Face Launches Reachy Mini Robots for Human-Robot Interaction - … | https://www.infoq.com/news/2025/07/hugg… | 1 | Dec 25, 2025 08:00 | active | |

Hugging Face Launches Reachy Mini Robots for Human-Robot Interaction - InfoQ
URL: https://www.infoq.com/news/2025/07/hugging-face-reachy/ Description: Hugging Face has launched its Reachy Mini robots, now available for order. Designed for AI developers, researchers, and enthusiasts, the robots offer an exciting opportunity to experiment with human-r Content:

A monthly overview of things you need to know as an architect or aspiring architect. View an example We protect your privacy. Live Webinar and Q&A: A Reference Architecture for Building Trustworthy Agentic AI Systems (Jan 22, 2026) Save Your Seat Facilitating the Spread of Knowledge and Innovation in Professional Software Development Unlock the full InfoQ experience by logging in! Stay updated with your favorite authors and topics, engage with content, and download exclusive resources. Vivek Yadav, an engineering manager from Stripe, shares his experience in building a testing system based on multi-year worth of data. He shares insights into why Apache Spark was the choice for creating such a system and how it fits in the "traditional" engineering practices. As AI evolves from tool to collaborator, architects must shift from manual design to meta-design. This article introduces the "Three Loops" framework (In, On, Out) to help navigate this transition. It explores how to balance oversight with delegation, mitigate risks like skill atrophy, and design the governance structures that keep AI-augmented systems safe and aligned with human intent. Jade Abbott discusses the shift from massive, resource-heavy models to "Little LMs" that prioritize efficiency and cultural sustainability. She explains how techniques like LoRA, quantization, and GRPO allow for high performance with less compute. By sharing the "Ubuntu Punk" philosophy, she shares how to move beyond extractive data practices toward human-centric, sustainable AI systems. Peter Hunter & Elena Stojmilova share Open GI's journey from a slow, legacy monolith to a cloud-native SaaS platform. They detail how adopting Team Topologies and a decentralized architectural approach empowered teams. Key practices discussed include utilizing Domain-Driven Design to create a Context Map, implementing the Advice Process with Architectural Principles, and more. 
Lesley Cordero discusses platform engineering as a sociotechnical solution for scaling organizations. She explains the CALMS framework, the "pendulum of tension" between reliability and velocity, and how to transition from reactive to proactive leadership. By focusing on communal learning and distributed power, she shares how to build resilient systems without sacrificing human well-being. Go from AI demos to real engineering impact. Learn to embed LLMs, govern & scale securely. SOLD OUT! Join Luca Mezzalira for this 5-week online cohort. Master socio-technical architecture leadership. Save your spot. Learn what works in AI, architecture, data, security & FinTech. Early Bird ends Jan 13. Learn how leading engineering teams run AI in production—reliably, securely, and at scale. Early Bird ends Jan 13. InfoQ Homepage News Hugging Face Launches Reachy Mini Robots for Human-Robot Interaction This item in japanese Jul 15, 2025 2 min read by Daniel Dominguez Hugging Face has launched its Reachy Mini robots, now available for order. Designed for AI developers, researchers, and enthusiasts, the robots offer an exciting opportunity to experiment with human-robot interaction and AI applications. The Reachy Mini is compact, measuring 11 inches in height and weighing just 3.3 pounds. It comes as a kit that users can assemble themselves, fostering a deeper understanding of the robot’s mechanics. The robot features motorized head and body rotations, animated antennas for expressiveness, and multimodal sensing capabilities, including a camera, microphones, and speakers. These features enable rich AI-powered audio-visual interactions, making Reachy Mini suitable for a wide range of AI development and research tasks. Reachy Mini is fully programmable in Python, with future support for JavaScript and Scratch. The robot integrates with the Hugging Face Hub, which gives users access to over 1.7 million AI models and more than 400,000 datasets. 
This integration allows users to build, test, and deploy custom AI applications on the robot, making it a versatile tool for AI development. Both versions of Reachy Mini offer a range of capabilities, but the Wireless version includes onboard computing, wireless connectivity, and a battery, while the Lite version requires an external computing source. Regardless of the version, Reachy Mini is designed for accessibility and ease of use, making it ideal for AI enthusiasts, students, and researchers of all skill levels. Hugging Face’s approach to Reachy Mini aligns with its commitment to open-source technology. The robot’s hardware, software, and simulation environments are all open-source, which means that users can extend, modify, and share their own robot behaviors. The community-driven approach encourages innovation and collaboration, with users able to contribute to the growing library of robot behaviors and features. The community feedback reflects enthusiasm, curiosity, and constructive critique, with a focus on its affordability, open-source nature, and potential for AI and robotics development. System design & AI architect Marcel Butucea commented: Reachy Mini robot ships as a DIY kit & integrates w/ their AI model hub! Could this open-source approach, like Linux for robots, democratize robotics dev? Meanwhile Clement Delangue, CEO of Hugging Face posted: Everyone will be able to build all sorts of apps thanks to the integrations with Lerobot & Hugging Face. The Reachy Mini Lite is expected to begin shipping in late summer 2025, with the Wireless version rolling out in batches later in the year. Hugging Face is focused on getting the robots into the hands of users quickly to gather feedback and continuously improve the product. Snowplow enables digital-first companies to turn behavioral data into fuel for real-time advanced analytics, predictive modeling, hyper-personalization, and customer-facing AI agent context. Learn More. 
InfoQ.com and all content copyright © 2006-2025 C4Media Inc.

Images (1):

|

|||||

| cTrader Introduces Native Python Supporting More Algo Trading Participants | https://financefeeds.com/ctrader-introd… | 0 | Dec 25, 2025 08:00 | active | |

cTrader Introduces Native Python Supporting More Algo Trading ParticipantsURL: https://financefeeds.com/ctrader-introduces-native-python-supporting-more-algo-trading-participants/ Description: Spotware, the developer of the cTrader multi-asset trading platform has launched an essential update with the introduction of cTrader Windows version 5.4, Content: |

|||||

| GitHub - OpenMind/OM1: Modular AI runtime for robots | https://github.com/OpenMind/OM1 | 1 | Dec 25, 2025 08:00 | active | |

GitHub - OpenMind/OM1: Modular AI runtime for robotsURL: https://github.com/OpenMind/OM1 Description: Modular AI runtime for robots. Contribute to OpenMind/OM1 development by creating an account on GitHub. Content:

OpenMind's OM1 is a modular AI runtime that lets developers create and deploy multimodal AI agents across digital environments and physical robots, including humanoids, phone apps, websites, quadrupeds, and educational robots such as TurtleBot 4. OM1 agents can process diverse inputs such as web data, social media, camera feeds, and LIDAR, while enabling physical actions including motion, autonomous navigation, and natural conversation. The goal of OM1 is to make it easy to create highly capable, human-focused robots that are easy to upgrade and (re)configure for different physical form factors. To get started with OM1, run the Spot agent. Spot uses your webcam to capture and label objects. These text captions are then sent to the LLM, which returns movement, speech, and face action commands. These commands are displayed on WebSim along with basic timing and other debugging information. You will need the uv package manager; the installation steps differ for macOS and Linux. Obtain your API key from the OpenMind Portal and copy it to config/spot.json5, replacing the openmind_free placeholder. Alternatively, run cp env.example .env and add your key to .env. Then run the agent. After launching OM1, the Spot agent will interact with you and perform (simulated) actions. For more help connecting OM1 to your robot hardware, see the getting started guide. Note: this is just an example agent configuration. If you want to interact with the agent and see how it works, make sure ASR (automatic speech recognition) and TTS (text-to-speech) are configured in spot.json5. OM1 assumes that robot hardware provides a high-level SDK that accepts elemental movement and action commands such as backflip, run, gently pick up the red apple, move(0.37, 0, 0), and smile.
An example is provided in src/actions/move/connector/ros2.py. If your robot hardware does not yet provide a suitable HAL (hardware abstraction layer), you will need traditional robotics approaches to create one: RL (reinforcement learning) in concert with suitable simulation environments (Unity, Gazebo), sensors (such as hand-mounted ZED depth cameras), and custom VLAs (vision-language-action models). It is further assumed that your HAL accepts motion trajectories, provides battery and thermal management/monitoring, and calibrates and tunes sensors such as IMUs, LIDARs, and magnetometers. OM1 can interface with your HAL via USB, serial, ROS2, CycloneDDS, Zenoh, or websockets. For an example of an advanced humanoid HAL, see Unitree's C++ SDK. A HAL, especially ROS2 code, is frequently dockerized and can then interface with OM1 through DDS middleware or websockets. OM1 is developed on: OM1 should run on other platforms (such as Windows) and on single-board computers such as the Raspberry Pi 5 16GB. We're excited to introduce full autonomy mode, where four services work together in a loop without manual intervention: From research to real-world autonomy, a platform that learns, moves, and builds with you. We will soon release the BOM (bill of materials) and DIY details. Stay tuned! Clone the following repos: To start all services, run the following commands. Set up the API key: for Bash, edit ~/.bashrc or ~/.bash_profile (e.g. vim ~/.bashrc); for Zsh, edit ~/.zshrc. Add your API key there. Update the docker-compose file, replacing "unitree_go2_autonomy_advance" with the agent you want to run. More detailed documentation is available at docs.openmind.org. Please read the Contributing Guide before making a pull request. This project is licensed under the MIT License, a widely used, permissive free software license that allows users to freely use, modify, and distribute the software; the project uses it to encourage collaboration, modification, and redistribution.
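OM1 expects the robot's HAL to accept elemental commands such as move(0.37, 0, 0) or smile. As a rough illustration of that contract, here is one way a command string returned by an LLM might be routed to a HAL. The `RobotHAL` protocol, the `LoggingHAL` stand-in, and `dispatch` are hypothetical names invented for this sketch; they are not part of OM1's API, whose real connector lives in src/actions/move/connector/ros2.py.

```python
import re
from typing import List, Protocol


# Hypothetical HAL interface for illustration only; OM1's real connectors differ.
class RobotHAL(Protocol):
    def move(self, x: float, y: float, z: float) -> str: ...
    def perform(self, action: str) -> str: ...


class LoggingHAL:
    """Hypothetical stand-in HAL that records commands instead of driving motors."""

    def __init__(self) -> None:
        self.log: List[str] = []

    def move(self, x: float, y: float, z: float) -> str:
        entry = f"move x={x} y={y} z={z}"
        self.log.append(entry)
        return entry

    def perform(self, action: str) -> str:
        entry = f"action {action}"
        self.log.append(entry)
        return entry


# A signed decimal number such as 0, -1.5 or .25.
NUM = r"[-+]?(?:\d+(?:\.\d*)?|\.\d+)"
# Matches "move(x, y, z)" with exactly three numeric arguments and optional spaces.
MOVE_RE = re.compile(rf"move\(\s*({NUM})\s*,\s*({NUM})\s*,\s*({NUM})\s*\)")


def dispatch(command: str, hal: RobotHAL) -> str:
    """Route one elemental command string to the matching HAL call."""
    command = command.strip()
    match = MOVE_RE.fullmatch(command)
    if match:
        x, y, z = (float(g) for g in match.groups())
        return hal.move(x, y, z)
    if command.startswith("move"):
        # Reject move commands with the wrong number or format of arguments.
        raise ValueError(f"malformed move command: {command!r}")
    # Everything else (e.g. "smile", "backflip") is treated as a named action.
    return hal.perform(command)


if __name__ == "__main__":
    hal = LoggingHAL()
    for cmd in ["move(0.37, 0, 0)", "smile", "backflip"]:
        print(dispatch(cmd, hal))
```

Keeping the HAL behind a small interface like this is what lets the same agent logic drive a simulator, a logger, or real hardware; a ROS2 or websocket connector would implement the same methods.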

Images (1):

|

|||||

| Towards development of geometric calibration and dynamic identification methods for … | https://theses.hal.science/tel-05133384… | 1 | Dec 25, 2025 08:00 | active | |

Towards development of geometric calibration and dynamic identification methods for anthropomorphic robots - TEL - Thèses en ligneURL: https://theses.hal.science/tel-05133384v1 Description: This thesis addresses geometric calibration and dynamic identification for anthropomorphic robotic systems, focusing on mobile manipulators and humanoid robots. For the TIAGo mobile manipulator, it introduces a comprehensive approach combining geometric calibration, suspension modeling, and backlash compensation, resulting in a 57% reduction in end-effector positioning RMSE. For the TALOS humanoid, it presents a whole-body calibration method using plane-constrained contacts and the novel IROC algorithm for optimal posture selection. A major contribution is FIGAROH, an open-source Python toolbox for unified calibration and identification across various robotic systems. FIGAROH features automatic generation of optimal calibration procedures, diverse parameter estimation methods, and validation tools. Extensive experiments on TIAGo, TALOS, and other platforms demonstrate significant improvements in accuracy and model fidelity. This research advances robot calibration and identification, offering theoretical insights and practical tools for a wide range of anthropomorphic systems, with potential applications in industrial and human-robot interaction scenarios. Content:

https://laas.hal.science/tel-05133384 Submitted on: Thursday, June 5, 2025, 16:45:43. Last modified on: Wednesday, October 22, 2025, 18:04:09. Long-term archiving on: Saturday, September 6, 2025, 19:05:39.
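The abstract reports a 57% reduction in end-effector positioning RMSE for TIAGo. To make that metric concrete, here is a minimal sketch of how such a figure is commonly computed, assuming RMSE is taken over per-sample Euclidean distances between predicted and measured 3D end-effector positions; the thesis's exact definition may differ, and `position_rmse` and `relative_reduction` are hypothetical helpers, not part of FIGAROH.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def position_rmse(predicted: Sequence[Point], measured: Sequence[Point]) -> float:
    """Root-mean-square of per-sample Euclidean position errors (assumed definition)."""
    if not predicted or len(predicted) != len(measured):
        raise ValueError("need two non-empty sequences of equal length")
    # Squared Euclidean error for each sample pose.
    squared_errors = [
        sum((p - m) ** 2 for p, m in zip(pred, meas))
        for pred, meas in zip(predicted, measured)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def relative_reduction(before: float, after: float) -> float:
    """Fractional improvement, e.g. 0.57 for a 57% reduction."""
    if before <= 0:
        raise ValueError("baseline RMSE must be positive")
    return (before - after) / before
```

For example, a hypothetical drop in RMSE from 10.0 mm before calibration to 4.3 mm after gives `relative_reduction(10.0, 4.3)` ≈ 0.57, i.e. a 57% reduction. These numbers are illustrative only and are not taken from the thesis.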

Images (1):

|

|||||

| 7 Ways Python Is Powering the Next Wave of Robotics … | https://python.plainenglish.io/7-ways-p… | 0 | Dec 25, 2025 08:00 | active | |

7 Ways Python Is Powering the Next Wave of Robotics InnovationDescription: There’s a lot of hype in robotics. Everyone wants to talk about humanoids doing backflips or robot dogs that can dance. But what most people miss is the quiet... Content: |

|||||

| Florida deploys robot rabbits to control invasive Burmese python population … | https://www.cbsnews.com/news/burmese-py… | 1 | Dec 25, 2025 08:00 | active | |

Florida deploys robot rabbits to control invasive Burmese python population - CBS NewsURL: https://www.cbsnews.com/news/burmese-pythons-robot-rabbits-florida-invasive-species/ Description: Burmese pythons pose a huge threat to native species in the Florida Everglades. Officials have used creative methods to manage the population of invasive snakes. Content: