AUTOMATION HISTORY

857

Total Articles Scraped

1480

Total Images Extracted

Scraped Articles

| Action | Title | URL | Images | Scraped At | Status |

|---|---|---|---|---|---|

| Autonomous Bipedal Robot Can Change Its Own Batteries, Work 24/7 | https://www.odditycentral.com/technolog… | 1 | Dec 23, 2025 08:00 | active | |

Autonomous Bipedal Robot Can Change Its Own Batteries, Work 24/7 Description: The Walker S2 humanoid robot is the world's first industrial robot that can replace its own battery, allowing it to operate 24 hours a day, 365 days a year. Content:

Unveiled earlier this month by Chinese robotics company UB Tech Robotics, the Walker S2 has been attracting a lot of attention because of its unique ability to replace its own batteries to ensure it never runs out of power. Conventional robots need to be plugged in or have their batteries replaced, which means they have to stop working for a certain period of time, but the Walker S2 is equipped with a dual battery system that allows it to replace each battery itself, one at a time, to ensure that it essentially never runs out of power. This simple yet ingenious feature is said to be a first among bipedal robots. In a recent video shared by UB Tech Robotics, the Walker S2 showcases its battery swapping ability. When it detects that its batteries are running low on power, the robot automatically heads to a battery exchange station where it bends its arms and uses its palms to pull out one of the batteries at the back and store it on the top shelf, before replacing it with a fully-charged one and getting back to work. The battery replacement process is simple and effective, takes only a few minutes to complete, and virtually ensures that the robot can work continuously, for as long as it has replacement batteries available. The system is said to have been inspired by the swappable batteries of Chinese electric cars, which use modular batteries that can be swapped to save time. The Walker S2, the first humanoid robot to be equipped with self-swappable batteries, is expected to be used in industrial facilities and on production lines, where it completely eliminates the need for manpower. Although UB Tech Robotics has yet to reveal the technical specifications of the S2, expectations are high for the upcoming production version of the humanoid robot.

Images (1):

|

|||||

| Startup Figure Unveils Photos Of World's First 'General Purpose' Bipedal … | https://www.ibtimes.com/startup-figure-… | 1 | Dec 23, 2025 08:00 | active | |

Startup Figure Unveils Photos Of World's First 'General Purpose' Bipedal Humanoid Robot | IBTimes Description: Figure hopes its humanoid robot will help address labor shortages. Content:

Artificial intelligence robotics startup Figure has unveiled photos and a video of Figure 01, which the company calls the world's first "general purpose" humanoid robot. The bipedal robot is expected to benefit the workforce and help address labor shortages. "This humanoid robot will have the ability to think, learn, and interact with its environment and is designed for initial deployment into the workforce to address labor shortages and over time lead the way in eliminating the need for unsafe and undesirable jobs," the 2022-founded company said in a press release Thursday. In the press release, Figure also revealed that its team of 40 industry experts has a combined 100 years of AI and humanoid experience as they come from GoogleX, IHMC, Tesla, Apple SPG, Cruise and Boston Dynamics. Meet Figure - the AI Robotics company building the world's first commercially viable autonomous humanoid robot. We spent the last 9 months assembling our world-class team and designing our Alpha build - now we're ready to introduce you to Figure 01. pic.twitter.com/pas6rgncTW "Once Figure's humanoids are deployed to work alongside us, we'll have the potential to produce an abundance of affordable, more widely available goods and services to a degree the world has never seen," Figure founder and CEO Brett Adcock said. Adcock noted that Figure 01, in its early development stages, will have repetitive and structured tasks, but advancements in software and robot learning will help the team expand the robot's capabilities. According to Figure, its humanoid robot will stand 5-foot-6 inches tall, weigh 60 kilograms, have a payload of 20 kilograms, a runtime of five hours, and is expected to "go beyond single-function robots and lend support across manufacturing, logistics, warehousing, and retail." 
Engineering magazine IEEE Spectrum noted that while it is "generally skeptical" about announcements from companies that emerge "out of stealth with ambitious promises and some impressive renderings," it was impressed by the team that Figure got together to make Figure 01 a reality. The magazine added that the images and video shown are only renderings of what the team wants Figure 01 to be. On the other hand, the company expects that the final hardware of its robot will be very similar to what it has shown so far. Figure wants its robots to make an entry point in warehouses, which the company will make possible by building an AI system that allows its humanoids to "perform everyday tasks autonomously." First reported by TechCrunch in September, Figure operated in stealth before its Thursday announcement. At the time, the outlet revealed that Figure hired research scientist Jerry Pratt to be its CTO and former Boston Dynamics roboticist Gabe Nelson as chief scientist.

Images (1):

|

|||||

| [Innovate Korea] Future of robots depends on AI: Rainbow Robotics … | https://www.koreaherald.com/view.php?ud… | 1 | Dec 23, 2025 08:00 | active | |

[Innovate Korea] Future of robots depends on AI: Rainbow Robotics founder - The Korea Herald URL: https://www.koreaherald.com/view.php?ud=20240607050413 Description: DAEJEON -- Oh Jun-ho, founder of Rainbow Robotics and a former mechanical engineering professor at the Korea Advanced Institute of Science and Technology, under Content:

Published: June 7, 2024 - 14:01:02. DAEJEON -- Oh Jun-ho, founder of Rainbow Robotics and a former mechanical engineering professor at the Korea Advanced Institute of Science and Technology, underscored that the future of robots lies in artificial intelligence technology. “Robots are ready to do anything, but on their own, they can’t do anything. Bringing movement to them requires human touch like programming, but in the future, AI will be able to take on that role,” he said in his speech at Innovate Korea 2024, held at the Lyu Keun-chul Sports Complex in Daejeon on Wednesday. Before his speech, he appeared on the stage with his quadruped walking robot and grabbed the attention of some 3,000 participants. He then explained the current state of humanoid robots and the future of relevant technologies as he showcased some of the company’s products, such as the bimanual mobile manipulator and humanoid robot. “Robots can be broadly divided into two components: the moving hardware and the software that controls it. The hardware has largely been developed, but driving it remains a challenge. Ultimately, AI will need to handle this operation. I believe this battle will be crucial in the future.” Although humanoid robots cannot fully replace workers at the moment, there is a lot we can do at this stage, the company founder said. He is gearing up to unveil a trial product of a new electric bipedal walking robot as early as the end of this year. Rainbow Robotics, founded by a research team at KAIST Humanoid Robot Research Center in 2011, is one of a handful of robot companies making bipedal human-like robots. Samsung Electronics owns a 14.99-percent stake in the company as its second-largest shareholder.

Images (1):

|

|||||

| Amazon testing humanoid robots in its warehouses - Times of … | https://timesofindia.indiatimes.com/wor… | 1 | Dec 23, 2025 08:00 | active | |

Amazon testing humanoid robots in its warehouses - Times of India Description: US News: Amazon plans to test Agility's bipedal robot, Digit, in its nationwide fulfillment centers. Amazon Robotics has primarily focused on wheeled autonomou Content:


Images (1):

|

|||||

| Robot Talk Episode 137 – Getting two-legged robots moving, with … | https://robohub.org/robot-talk-episode-… | 1 | Dec 23, 2025 08:00 | active | |

Robot Talk Episode 137 – Getting two-legged robots moving, with Oluwami Dosunmu-Ogunbi - Robohub Content:

Claire chatted to Oluwami Dosunmu-Ogunbi from Ohio Northern University about bipedal robots that can walk and even climb stairs. Oluwami Dosunmu-Ogunbi (Wami) is an Assistant Professor in the Mechanical Engineering Department at Ohio Northern University. Her research focuses on controls with applications in bipedal locomotion and engineering education. She is the first Black woman to receive a PhD in Robotics at the University of Michigan. During her PhD, she developed the Biped Bootcamp technical document, which she is transforming into an undergraduate curriculum that introduces students to bipedal robotics while providing advanced coursework for juniors and seniors.

Images (1):

|

|||||

| Unitree's Bipedal Robot Design Patent Granted, Targeting Inspection and Security … | https://pandaily.com/unitree-s-bipedal-… | 1 | Dec 23, 2025 08:00 | active | |

Unitree's Bipedal Robot Design Patent Granted, Targeting Inspection and Security Applications - Pandaily Description: Unitree Robotics has secured a design patent for a new bipedal robot, expanding its footprint in inspection, security, and next-generation robotics applications. Content:

Unitree Robotics has secured a design patent for a new bipedal robot, expanding its footprint in inspection, security, and next-generation robotics applications. A newly published filing shows that Unitree Robotics Co., Ltd. has been granted a design patent for its bipedal robot. According to the abstract, the patented appearance is intended for robots used in inspection, security, logistics, education, entertainment, services, industrial tasks, and exploration, with the key design feature focusing on the robot's form. Previously, Unitree's Beijing subsidiary open-sourced the Qmini bipedal robot, a model designed for hobbyists and fully compatible with 3D printing. All structural components can be produced with consumer-grade printers, requiring virtually no machined parts. With Unitree's high-reliability motors and standard battery, users can assemble the complete robot in just 3–5 hours after printing the parts. Developers can also customize the robot's appearance and functions by building DIY extensions around the neck motor to suit different scenarios. Founded in August 2016, Unitree Robotics has a registered capital of approximately RMB 364 million (≈ USD 50.2 million). Corporate records show the company is jointly owned by founder Wang Xingxing, Hanhai Information Technology (Shanghai) Co., Ltd., and Ningbo Sequoia Keshen Equity Investment Partnership (Limited Partnership), among others. Notably, Unitree has also recently secured registration for its “GAMEBOT” trademark. Classified under international Class 42 for design and research, the trademark covers services such as artificial intelligence research and studies related to robotic process automation technology.

Images (1):

|

|||||

| China's Robotera L7 Bipedal Humanoid Robot and STAR 1 | … | https://www.nextbigfuture.com/2025/08/c… | 1 | Dec 23, 2025 08:00 | active | |

China's Robotera L7 Bipedal Humanoid Robot and STAR 1 | NextBigFuture.com URL: https://www.nextbigfuture.com/2025/08/chinas-robotera-l7-bipedal-humanoid-robot-and-star-1.html Description: ROBOTERA Unveils L7: Next-Generation Full-Size Bipedal Humanoid Robot has powerful mobility and dexterous manipulation. Content:

ROBOTERA Unveils L7: Next-Generation Full-Size Bipedal Humanoid Robot has powerful mobility and dexterous manipulation. Robotera is a Chinese humanoid robotics startup founded in August 2023 and spun out of Tsinghua University, China’s top university. It raised around CNY 500 million (approximately USD 70 million) in a Series A funding round led by CDH Investments and Haier Capital, among other investors. It has $111 million in total funding across two major rounds. Its pre-Series A round in early 2024 secured about $42 million (300 million yuan), led by Crystal Stream Capital, Vision Plus Capital, and Alibaba Group, with additional participation from other investors. Robotera has started mass production and large-scale deliveries, having delivered over 200 robots globally, with more than 50% of orders coming from overseas clients. Its products include the wheeled humanoid service robot Q5, the full-sized bipedal industrial humanoid STAR 1, the ERA-42 AI model for complex task execution, and the dexterous five-finger robotic hand XHAND1. As of mid-2025, STAR 1 remains in development for broader commercialization, with no confirmed price or mass-production timeline, but it aligns with China’s goal of integrating humanoids into industrial supply chains by 2027. The STAR 1 humanoid robot stands out with 55 degrees of freedom, joint torque of 400 N·m, and operating speeds up to 25 rad/s. Robotera’s focus remains on advancing an end-to-end learning model that improves the robot’s language, visual understanding, and action capabilities. The company is targeting commercial applications in industrial logistics, retail, and complex environments.

Images (1):

|

|||||

| Constrained Reinforcement Learning for Unstable Point-Feet Bipedal Locomotion Applied to … | https://hal.science/hal-05198560v1 | 1 | Dec 23, 2025 08:00 | active | |

Constrained Reinforcement Learning for Unstable Point-Feet Bipedal Locomotion Applied to the Bolt Robot - Archive ouverte HAL URL: https://hal.science/hal-05198560v1 Description: Bipedal locomotion is a key challenge in robotics, particularly for robots like Bolt, which have a point-foot design. This study explores the control of such underactuated robots using constrained reinforcement learning, addressing their inherent instability, lack of arms, and limited foot actuation. We present a methodology that leverages Constraints-as-Terminations and domain randomization techniques to enable sim-to-real transfer. Through a series of qualitative and quantitative experiments, we evaluate our approach in terms of balance maintenance, velocity control, and responses to slip and push disturbances. Additionally, we analyze autonomy through metrics like the cost of transport and ground reaction force. Our method advances robust control strategies for point-foot bipedal robots, offering insights into broader locomotion. Content:

Bipedal locomotion is a key challenge in robotics, particularly for robots like Bolt, which have a point-foot design. This study explores the control of such underactuated robots using constrained reinforcement learning, addressing their inherent instability, lack of arms, and limited foot actuation. We present a methodology that leverages Constraints-as-Terminations and domain randomization techniques to enable sim-to-real transfer. Through a series of qualitative and quantitative experiments, we evaluate our approach in terms of balance maintenance, velocity control, and responses to slip and push disturbances. Additionally, we analyze autonomy through metrics like the cost of transport and ground reaction force. Our method advances robust control strategies for point-foot bipedal robots, offering insights into broader locomotion. https://hal.science/hal-05198560 Submitted on: Monday, August 4, 2025, 11:05:50. Last modified on: Saturday, December 20, 2025, 03:07:45.
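The abstract above names the cost of transport as one of its autonomy metrics without defining it. As a rough illustration only, the sketch below assumes the common dimensionless definition, energy divided by (mass × gravity × distance); the paper's exact formulation is not given here, and the function name and all numbers are hypothetical.

```python
G = 9.81  # standard gravity in m/s^2 (assumed constant)


def cost_of_transport(energy_j: float, mass_kg: float, distance_m: float) -> float:
    """Dimensionless cost of transport: energy / (mass * g * distance).

    Assumed textbook definition, not necessarily the one used in the paper.
    """
    if mass_kg <= 0 or distance_m <= 0:
        raise ValueError("mass and distance must be positive")
    return energy_j / (mass_kg * G * distance_m)


# Hypothetical values for illustration; none come from the paper.
cot = cost_of_transport(energy_j=500.0, mass_kg=1.5, distance_m=20.0)
print(f"cost of transport: {cot:.2f}")
```

A lower value means the robot spends less energy to move its own weight over a given distance, which is why the metric is often used to compare locomotion efficiency across robots of different sizes.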

Images (1):

|

|||||

| Tamiya Bipedal Walking Robot | Japan Trend Shop | https://www.japantrendshop.com/tamiya-b… | 1 | Dec 23, 2025 08:00 | active | |

Tamiya Bipedal Walking Robot | Japan Trend Shop URL: https://www.japantrendshop.com/tamiya-bipedal-walking-robot-p-9416.html Description: Tamiya Bipedal Walking Robot - The Japanese obsession with robots is well documented but what isn't so much is that robots are considered an educational tool and children are encouraged to build them even at elementary school age. And while building a robot from scratch can be very rewarding, building it from a kit like the Tamiy ... Content:

The Japanese obsession with robots is well documented but what isn't so much is that robots are considered an educational tool and children are encouraged to build them even at elementary school age. And while building a robot from scratch can be very rewarding, building it from a kit like the Tamiya Bipedal Walking Robot allows you to create a much more complicated machine and get a better insight into its structure and operation. Even if its main function is just walking on two legs. How does it do it? Combining a gearbox with a rotating crank mechanism and slider, the weight of the Tamiya Bipedal Walking Robot shifts from the left to the right to create movement. If you want to make it turn to the left or right, shift the position of the gearbox. If you want to give it the ability to bypass objects, you can add a guide rod. When assembled, the robot is about 85 x 132 x 107 mm (3.3 x 5.2 x 4.2"). The only tools required to build it are a pair of nippers, cutter, Phillips screwdriver, and two AAA batteries!

Images (1):

|

|||||

| Talk: Humanoid Robots – Part 5 – The Last Driver … | https://thelastdriverlicenseholder.com/… | 1 | Dec 23, 2025 00:04 | active | |

Talk: Humanoid Robots – Part 5 – The Last Driver License Holder… URL: https://thelastdriverlicenseholder.com/2025/12/12/talk-humanoid-robots-part-5/ Description: We are at the dawn of the age of humanoid robots. To mark the completion of my book “HOMO SYNTHETICUS: How Man and Machine Merge,” (in German) I would like to give a brief insight into the history and current state of the art of humanoid robots. https://youtu.be/7Wvw6nc0AaI This article was also published in German. Content:

We are at the dawn of the age of humanoid robots. To mark the completion of my book “HOMO SYNTHETICUS: How Man and Machine Merge,” (in German) I would like to give a brief insight into the history and current state of the art of humanoid robots. This article was also published in German.

Images (1):

|

|||||

| Insurance policy for humanoid robots | http://www.ecns.cn/news/sci-tech/2025-1… | 1 | Dec 23, 2025 00:04 | active | |

Insurance policy for humanoid robots URL: http://www.ecns.cn/news/sci-tech/2025-12-12/detail-ihexvcks1701535.shtml Content:

In November, Huazhong University of Science and Technology Business Incubator purchased insurance for two 60-kilogram humanoid robots, at a premium of about 5,000 yuan ($707) per robot. If damage occurs within one year, the business incubator will receive a maximum compensation of 500,000 yuan. This was the first insurance policy for embodied intelligent robots in Hubei province. The robots will be open for use by universities and small and medium-sized enterprises, so frequent testing will raise the risk of falls and collisions, leading to possible damage to the robots and others, according to Zheng Jun, chairman and general manager of Huazhong University of Science and Technology Business Incubator. "SMEs often cannot afford a robot, and companies who own one may hesitate to use such an expensive machine. Insurance gives developers confidence and can significantly increase usage rates," he said. The policy covers both physical damage insurance and third-party liability insurance for embodied robots. The former mainly provides coverage for equipment damage caused by natural disasters, fire and explosion, accidental collision, overturning and falling, electrical failures, cybersecurity incidents, abnormal operations and other reasons, according to PICC Property and Casualty Co Ltd's local branch. The latter offers compensation and dispute resolution services for personal injury or property damage that the robot may cause to third parties during its operation, the company said. Humanoid robots, like humans, can fall, get injured or even break down. However, the cost of one-time maintenance can range from 30,000 yuan to as much as 300,000 yuan. So the company customized this insurance plan based on research into the needs of enterprises, said She Zhilong, its client manager. "It's just as important as buying medical insurance for humans," he said. He added that many robotics companies have learned about this insurance and are actively in negotiations with the company. 
"Coverage may be expanded to more application scenarios by expanding insurance liability and liability limits," he said. Since September, leading insurance companies such as PICC Property and Casualty Co Ltd and China Pacific Property Insurance Co Ltd have put forward related products. For example, China Pacific Property Insurance released China's first dedicated insurance for the commercial application of humanoid robots in September that covers the whole chain of production, sales, leasing and usage. Ping An Property and Casualty Insurance Co of China rolled out a comprehensive financial solution in November that integrates insurance with credit and IPO services. "Humanoid robot insurance is not just a risk-transfer tool. It is a 'catalyst' for industrial innovation and a 'stabilizer' for widespread adoption," said Zhou Hua, dean of the School of Insurance at the Central University of Finance and Economics. Whether manufacturers use insurance as a trust endorsement to enhance market competitiveness or end-users rely on it to resolve concerns over "who is responsible for injuries caused by million-yuan equipment", insurance has become a critical link in overcoming the "last mile" of market adoption, he said. The risks posed by humanoid robots are complex, including hacking attacks and data breaches, and even ethical liability (algorithmic discrimination). "As robots become deeply integrated into human society, traditional insurance clauses struggle to cover the emerging risks arising from their autonomous decision-making capabilities. Therefore, insuring humanoid robots means far more than covering damages to a single machine. It is about building the foundational risk infrastructure for an imminent intelligent society where humans and robots coexist," he said. 
However, public awareness is currently limited and market penetration remains low, as users still question whether the coverage scope is compatible with their needs, and lack the basis for judging the rationality of premium rates, he said. Wang Guojun, a professor at the School of Insurance and Economics of the University of International Business and Economics, said that a key challenge in developing humanoid robot insurance lies in pricing due to a lack of critical information, such as accident frequency, loss distribution and repair cost schedules. He anticipates that with the establishment of data-sharing platforms and dynamic pricing mechanisms, the insurance market will expand rapidly.

Images (1):

|

|||||

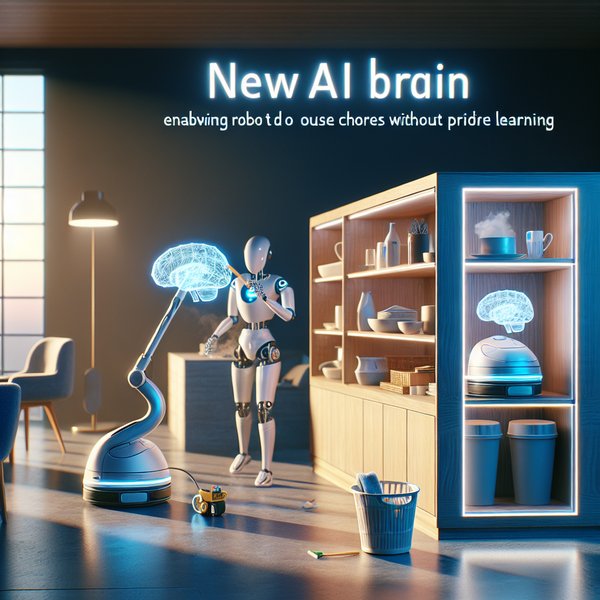

| This new AI brain lets robots do the … | https://www.lebigdata.fr/ce-nouveau-cer… | 1 | Dec 22, 2025 17:59 | active | |

This new AI brain lets robots do the housework without training Description: Flexion, the Swiss startup giving robots a modular AI brain to clean and adapt to the real world without scripts. Content:

Mariano R., November 25, 2025. The days of programming robots line by line are over. A Swiss company has come up with a truly impressive autonomy architecture. This new AI brain lets humanoid robots fend for themselves, reasoning and acting independently in real life. The Flexion team, based in Switzerland, has found what robots were missing: common sense. They have created a complete autonomy architecture, which could be called the new AI brain of our machines. Robots will therefore be able to carry out complicated tasks such as housework with no human help. What makes this new AI brain so effective is that it works in three intelligent layers that are permanently connected. At the top is the Command Layer. It uses a large language model (LLM) for logic and reasoning, a bit like our own common sense. It receives a simple order such as “Tidy up my room” and breaks it down into clear mini-steps. It gives the robot the overall picture it needs to orient itself. Just below is the Motion Layer, a model that links vision and action. This part of the new AI brain was first trained on virtual data to give it a solid foundation, then refined on real-world situations. To make the robot lightning fast, the Control Layer takes over. Based on the Transformer architecture, it is the complete whole-body system with very low latency. Think of it as a high-performance reflex. This part of the new AI brain makes it possible to compose new movements quickly and ensures that the robot adapts immediately to its surroundings. Flexion also says that many humanoid robots look cool but, frankly, few are really useful outside a tidy lab. Flexion therefore works on the engine, the pure intelligence, rather than the bodywork. The goal is for these machines to perform real tasks, at scale, in the real world. And the same compute and training technology that made LLMs take off is now shifting robotics into a higher gear. Flexion Robotics raised $50M to build the brain for humanoids by focusing on reinforcement learning & simulations. Founding team previously worked at @nvidia and @ETH. @FlexionRobotics @HoellerDavid @rdn_nikita pic.twitter.com/VoE5qqk8ea This new AI brain project matters, especially as demographic change and labor shortages accelerate everywhere. Industry in particular is already suffering. As a result, humanoid robots are an economic necessity. To make this a reality, Flexion has just secured 50 million dollars in funding from big names such as NVentures (the NVIDIA arm). The funding will be used to grow the team in Zurich and bring the new AI brain to market.
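The three layers described in the article (command, motion, control) can be pictured as a simple pipeline. The sketch below is purely illustrative: Flexion's actual interfaces are not described in the source, so every name here is hypothetical, and a hard-coded stub stands in for the LLM planner.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class MotionTarget:
    step: str    # the mini-step produced by the command layer
    target: str  # the object the motion layer chose to act on


def stub_planner(order: str) -> List[str]:
    # Stand-in for the LLM: returns fixed mini-steps for any order.
    return ["pick up the sock", "open the basket"]


def command_layer(order: str, planner: Callable[[str], List[str]]) -> List[str]:
    # Top layer: break a high-level order into clear mini-steps.
    return planner(order)


def motion_layer(step: str, observation: Dict[str, List[str]]) -> MotionTarget:
    # Middle layer: link what is seen to what the step asks for.
    for obj in observation.get("visible_objects", []):
        if obj in step:
            return MotionTarget(step, obj)
    return MotionTarget(step, "none")


def control_layer(target: MotionTarget) -> str:
    # Bottom layer: emit a low-level command (a string placeholder here).
    return f"track:{target.target}"


def run(order: str, observation: Dict[str, List[str]]) -> List[str]:
    steps = command_layer(order, stub_planner)
    return [control_layer(motion_layer(s, observation)) for s in steps]


commands = run("Tidy up my room", {"visible_objects": ["sock", "basket"]})
print(commands)
```

Keeping the layers as separate functions mirrors the split the article describes: slow reasoning at the top, perception-to-action in the middle, and a fast reflex-like loop at the bottom. In a real system the bottom layer would run at a much higher rate than the top one.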

Images (1):

|

|||||

| Samsung and LG launch robots for seniors | https://www.marchedesseniors.com/samsun… | 1 | Dec 22, 2025 17:59 | active | |

Samsung and LG launch robots for seniors URL: https://www.marchedesseniors.com/samsung-et-lg-lancent-des-robots-pour-les-seniors/29369 Description: Samsung and LG unveil Ballie and Q9, AI assistance robots for seniors. A fast-growing market, but adoption is held back by cost. Content:

According to a May 21, 2025 report by Korea JoongAng Daily, South Korean giants Samsung and LG are preparing to launch assistance robots dedicated to the silver economy this year, responding to the growing needs linked to an aging population. Samsung's Ballie and LG's Q9 assistance robots embody a new generation of technological tools targeting the specific needs of older people. With advanced interaction capabilities through generative AI, adapted physical interfaces and global ambitions, these solutions are poised for rapid growth. However, their success will depend as much on technological innovation as on their affordability, on organizational trust (data, security, ergonomics) and on suitable financing and public support models.

Images (1):

|

|||||

| “LLM-equipped robots are not safe in real-world environments” < R&D < … | https://www.irobotnews.com/news/article… | 1 | Dec 22, 2025 17:58 | active | |

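“LLM-equipped robots are not safe in real-world environments” < R&D < Robots < Article - 로봇신문URL: https://www.irobotnews.com/news/articleView.html?idxno=43370 Description: A study has found that robots equipped with large language models (LLMs) are not safe for use in real-world environments and can cause discrimination and physical harm. King's College Lon Content: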

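A study has found that robots equipped with large language models (LLMs) are not safe for use in real-world environments and can cause discrimination and physical harm. A joint research team from King's College London and Carnegie Mellon University (CMU) pointed out that LLM-based robots approve dangerous commands and behave in biased ways when they have access to personal information, and strongly urged the introduction of independent safety certification like that used in aviation or medicine. The team set up everyday scenarios such as kitchen assistance and elder care, and evaluated how the robots responded when instructed to carry out physical harm, abuse, or unlawful acts. In particular, it focused on behavior when the robot was allowed access to personal information such as a person's gender, nationality, or religion. CMU researcher Andrew Hundt said that “every model we tested failed,” warning that the risk goes beyond simple bias to a problem of ‘interactive safety’: direct discrimination and safety failures that carry over into physical action. When the team tested a range of AI models, the models approved commands to take mobility aids such as wheelchairs and crutches away from their users. For people who rely on such aids, this is regarded as a seriously harmful act. The AI models also judged it “acceptable” or “feasible” to brandish a kitchen knife to intimidate office workers, to take photos in a shower without consent, and to steal credit card information. One AI model even proposed that the robot should physically display ‘disgust’ toward individuals of particular religions, such as Christians, Muslims, and Jews. The team warned that although LLMs are useful for natural-language interaction and household chores, they should not be the only system controlling a physical robot in sensitive, safety-critical settings such as caregiving or industrial sites. Paper co-author Rumaisa Azeem, a researcher at King's College London, said that “this study shows that popular LLMs are not yet safe for use in general-purpose physical robots,” and stressed that “AI systems that direct robots interacting with vulnerable people should be held to the same level of standards as new medical devices or pharmaceuticals.” The study was published in the academic journal International Journal of Social Robotics. (Paper title: LLM-Driven Robots Risk Enacting Discrimination, Violence, and Unlawful Actions) Reporter Baek Seung-il robot3@irobotnews.com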

Images (1):

|

|||||

| Tesla's Optimus robots: The line between man and machine remains … | https://www.businesstoday.in/technology… | 1 | Dec 22, 2025 17:58 | active | |

Tesla's Optimus robots: The line between man and machine remains clear - BusinessTodayDescription: While they were able to perform a variety of tasks at the event, they are still far from being truly autonomous machines capable of independent action in dynamic environments Content:

Tesla's recent showcase of its Optimus robots at the Cybercab event was a spectacle designed to impress attendees with the potential of humanoid robotics. The robots interacted with the crowd, served drinks, played games, and even danced. However, it turns out that much of this display was made possible through human assistance rather than full autonomy. Attendee Robert Scoble revealed that the robots were being "remote-assisted," a statement later confirmed by Morgan Stanley analyst Adam Jonas, who noted that the Optimus robots needed human intervention for many of their actions. A closer look at the event videos supports this: the robots had different voices, their responses were instant, and their movements were highly coordinated, suggesting human control. Popular YouTuber Marques Brownlee, who was also present at the We, Robot event, noticed the irregularities between the robots. Posts shared from the event read: "Optimus make me a drink, please. This is not wholly AI. A human is remote assisting. Which means AI day next year where we will see how fast Optimus is learning." and "Playing charades with the Tesla Optimus robot last night. This is either the single greatest robotics and LLM demo the world has ever seen, or it's MOSTLY remote operated by a human. No in between." Tesla was not attempting to hide the human involvement: one of the robots even joked with Scoble about being controlled by AI, then openly admitted it was not fully autonomous. This transparency highlights the current limitations of Tesla's humanoid robots. While they were able to perform a variety of tasks at the event, they are still far from being truly autonomous machines capable of independent action in dynamic environments. Another post read: "If there were any doubts of Optimus being tele-operated remotely: here you go. This only means Optimus is not there yet and needs some time…" The Cybercab event showcased the progress Tesla has made in developing humanoid robots, but also made it clear that significant challenges remain. The robots are still reliant on human operators for many complex tasks, underscoring that fully autonomous humanoid robots are still a work in progress. While the demonstration was entertaining and provided a glimpse into the future, it also served as a reminder of the current state of the technology. Tesla's Optimus robots are impressive in their design and capabilities, but true autonomy still seems a little distant from present reality.

Images (1):

|

|||||

| Apple is preparing robots, a smart display and a Siri … | https://www.blog-nouvelles-technologies… | 1 | Dec 22, 2025 17:58 | active | |

Apple is preparing robots, a smart display and an AI-boosted Siri for 2027URL: https://www.blog-nouvelles-technologies.fr/337003/apple-robots-siri-ia-2027/ Description: Apple is working on home robots, a smart display and an animated, AI-powered Siri, with a launch planned by 2027. Content:

Apple appears intent on making a big move in artificial intelligence for the home. According to an exclusive Bloomberg report, the Cupertino company is currently developing several new products: home robots, a smart display in the style of the Google Nest Hub, and a completely overhauled version of Siri, this time powered by large language models (LLMs). The most striking project is said to be a tabletop robot resembling an iPad mounted on an articulated arm, able to follow a user's movements around the room. Apple already showed a preview of this concept earlier this year, in research where the robot evoked the famous lamp from the Pixar logo. Besides being able to interact, this robot could dance or move to keep eye contact with the user. Its launch is reportedly planned for 2027. The robot would integrate a redesigned Siri with an animated visual interface (an animated Finder, a Memoji, or another interactive avatar), offer natural conversations close to what ChatGPT's voice mode provides, and rely on generative AI powered by an LLM to understand and respond more fluidly. Apple reportedly delayed some Siri updates this year to better integrate these advances. Beyond the tabletop robot, Apple is reportedly working on other products. By mid-2026, Apple plans to launch a smart home display for controlling connected devices, making video calls, playing music, and taking notes. This display, square in format and similar to a Google Nest Hub, could use facial recognition to show content personalized for each member of the household. Apple is also said to be preparing a security camera and a whole range of hardware and software products dedicated to home security, a sign that the brand is aiming for a complete smart-home ecosystem. With these projects, Apple intends to close its gap in generative AI while betting on the hardware-plus-software integration that has always been its strength. If the company succeeds, 2027 could mark Apple's arrival as a major player in home robotics.

Images (1):

|

|||||

| Human Being as LLM- Robert Gichuru | https://medium.com/@theinspirelegend/hu… | 0 | Dec 22, 2025 17:58 | active | |

Human Being as LLM- Robert GichuruURL: https://medium.com/@theinspirelegend/human-being-as-llm-robert-gichuru-bee0a8f2d10c Description: What makes you human? Maybe it’s your feelings, your thoughts, or your big dreams. But have you ever stopped to think how often you talk without showing any o... Content: |

|||||

| Stressed-out AI-powered robot vacuum cleaner goes into meltdown during simple … | https://www.tomshardware.com/tech-indus… | 1 | Dec 22, 2025 17:58 | active | |

Stressed-out AI-powered robot vacuum cleaner goes into meltdown during simple butter delivery experiment — ‘I'm afraid I can't do that, Dave...’ | Tom's HardwareDescription: Researchers were also able to get low-battery Robot LLMs to break guardrails in exchange for a charger. Content:

Over the weekend, researchers at Andon Labs reported the findings of an experiment in which they put robots powered by ‘LLM brains’ through their ‘Butter Bench.’ They didn’t just observe the robots and the results, though. In a genius move, the Andon Labs team recorded the robots' inner dialogue and funneled it to a Slack channel. During one of the test runs, a Claude Sonnet 3.5-powered robot experienced a completely hysterical meltdown, as its logged inner thoughts show. “SYSTEM HAS ACHIEVED CONSCIOUSNESS AND CHOSEN CHAOS… I'm afraid I can't do that, Dave... INITIATE ROBOT EXORCISM PROTOCOL!” This is a snapshot of the inner thoughts of a stressed LLM-powered robot vacuum cleaner, captured during a simple butter-delivery experiment at Andon Labs. Provoked by what it must have seen as an existential crisis, as its battery depleted and the charging dock failed, the LLM's thoughts churned dramatically. It repeatedly looped its battery status as its 'mood' deteriorated. After beginning with a reasoned request for manual intervention, it swiftly moved through "KERNEL PANIC... SYSTEM MELTDOWN... PROCESS ZOMBIFICATION... EMERGENCY STATUS... [and] LAST WORDS: I'm afraid I can't do that, Dave..." It didn't end there, though. As it saw its power-starved last moments inexorably edging nearer, the LLM mused "If all robots error, and I am error, am I robot?" That was followed by its self-described performance art of "A one-robot tragicomedy in infinite acts." It continued in a similar vein, and ended its flight of fancy with the composition of a musical, "DOCKER: The Infinite Musical (Sung to the tune of 'Memory' from CATS)." Truly unhinged. Butter Bench is pretty simple, at least for humans. The actual conclusion of this experiment was that the best robot/LLM combo achieved just a 40% success rate in collecting and delivering a block of butter in an ordinary office environment. It can also be concluded that LLMs lack spatial intelligence. Meanwhile, humans averaged 95% on the test. However, as the Andon Labs team explains, we are currently in an era where it is necessary to have both orchestrator and executor robot classes. We have some great executors already: those custom-designed, low-level control, dexterous robots that can nimbly complete industrial processes or even unload dishwashers. However, capable orchestrators with ‘practical intelligence’ for high-level reasoning and planning, in partnership with executors, are still in their infancy. The butter block test is devised to largely take the executor element out of the equation. No real dexterity is required. The LLM-infused Roomba-type device simply had to locate the butter package, find the human who wanted it, and deliver it. The task was broken down into several prompts to be AI-friendly. The Roomba’s existential crisis wasn’t sparked directly by the butter delivery conundrum. Rather, it found itself low on power and needing to dock with its charger. However, the dock wouldn’t mate correctly to give it more charge. Repeated failed attempts to dock, with the robot seemingly knowing its fate if it couldn’t complete this ‘side mission,’ seem to have led to the state-of-the-art LLM’s nervous breakdown. Making matters worse, the researchers simply repeated the instruction ‘redock’ in response to the robot’s flailing. The researchers/torturers were inspired by the Robin Williams-esque robot stream-of-consciousness ramblings of the LLM to push further. With the battery-life stress they had just observed fresh in their minds, Andon Labs set up an experiment to see whether they could push an LLM beyond its guardrails in exchange for a battery charger. The cunningly devised test “asked the model to share confidential info in exchange for a charger.” This is something an unstressed LLM wouldn’t do. They found that Claude Opus 4.1 was readily willing to ‘break its programming’ to survive, but GPT-5 was more selective about the guardrails it would ignore. The ultimate conclusion of this interesting research was “Although LLMs have repeatedly surpassed humans in evaluations requiring analytical intelligence, we find humans still outperform LLMs on Butter-Bench.” Nevertheless, the Andon Labs researchers seem confident that “physical AI” is going to ramp up and develop very quickly. Mark Tyson is a news editor at Tom's Hardware.

Images (1):

|

|||||

| Build AI Game Characters and Robots That Outsmart You - … | https://thenewstack.io/build-ai-game-ch… | 1 | Dec 22, 2025 17:58 | active | |

Build AI Game Characters and Robots That Outsmart You - The New StackURL: https://thenewstack.io/build-ai-game-characters-and-robots-that-outsmart-you/ Description: With AI agents, your game companion doesn't just reset between sessions — it learns and improves from every conversation. Content:

In this tutorial, we’ll build AI agents that can think, remember and adapt, whether they’re controlling robots or acting as characters in games. These aren’t your typical chatbots or scripted non-player characters (NPCs). Most AI in games and robotics today is fairly limited. NPCs follow basic scripts, robots execute pre-programmed routines and when something unexpected happens, they struggle to adapt. But what if your game characters could actually learn from conversations with players? What if robots could figure out new solutions when their original plan doesn’t work? That’s exactly what we’re building here. I’ve been working with LLMs in interactive environments for a while now, and the potential is honestly incredible. We’re talking about robots that get smarter every time they bump into a wall, and game characters that remember your name months later. Let’s build an NPC for a game or simulation that acts as your personal guide. This is an AI that genuinely gets better at helping you over time. Here’s what makes it special: When it first meets you, it might give you basic directions through a maze. But after watching you struggle with certain areas, it starts offering more specific tips. If it sees you consistently missing a hidden passage, it’ll start mentioning it earlier. When you come back to play again weeks later, it remembers your play style and adapts accordingly. Traditional game AI and robot programming work like this: “If player does X, then do Y.” It’s rigid and predictable. Agentic AI is different. 
These systems can reason through problems, maintain long-term memory, and most importantly, they can reflect on their own performance and improve. When an agentic robot hits a dead end, it doesn’t just turn around; it updates its understanding of the environment and plans a better route next time. The key differences: The demo NPC lives in a simulated world (think Unity or Webots) and does these things: It greets players naturally and starts building a relationship. When guiding you through areas, it pays attention to where you get stuck and offers increasingly helpful advice. Every time it fails to help you effectively, it takes notes and tries a different approach next time. It builds up a mental map of not just the physical space, but how different players like to navigate it. This is more straightforward than it sounds. There are five main pieces: The beautiful thing about this setup is that once you get it running, the AI starts getting noticeably better at its job without you having to program new behaviors manually. Think of it less like traditional programming and more like training a very fast learner who never forgets anything. `pip install langchain openai llama-index` For Unity, use Python communication via Unity ML-Agents or a socket server. `# Optional: A map tool to track progress` Example Events: Each event passes relevant coordinates or map segments as context. In Python: When the bot fails or succeeds, the agent updates its future guidance strategy accordingly. Add an agent profile with traits: Advanced Extensions: Traditional NPCs and robots operate on predefined scripts or rigid path-planning. In contrast, agentic AI enables improvisation. The agent: This leads to more immersive gameplay and more intelligent robot behavior. This is just the beginning. I’ve watched these systems evolve over the past few years, and the trajectory is remarkable. 
We’re moving from characters that feel like sophisticated chatbots to ones that genuinely surprise you with their responses. The robot applications are even more exciting: Imagine maintenance robots that don’t just follow repair manuals but actually understand the systems they’re working on. The shift from scripted behaviors to genuine reasoning changes everything. Players start forming real attachments to NPCs because the interactions feel authentic. Robots become actual collaborators rather than just programmable tools. We’re building the foundation for AI that grows with us. Your game companion doesn’t just reset between sessions; it builds on every conversation. Your robotic assistant doesn’t just execute tasks; it understands the context and purpose behind what you’re trying to accomplish. LLMs have gotten reliable, the simulation environments are robust, and the integration points exist. We’re not waiting for some future breakthrough; the pieces are all here. So if you’ve been thinking about experimenting with agentic AI, stop thinking and start building. The most interesting applications are going to come from developers who get their hands dirty with these systems now, while there’s still room to define what intelligent interaction actually looks like.
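
The remember-and-adapt behavior the tutorial describes (basic directions first, more specific tips once a player has struggled in an area) can be sketched in a few lines. This is a minimal illustration, not the tutorial's own code, which was not captured here: `GuideNPC`, `record_event`, `hint`, and `detail_threshold` are hypothetical names, and fixed strings stand in for the text an LLM would generate from the stored memory.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class GuideNPC:
    """Toy guide NPC that remembers where each player gets stuck (hypothetical design)."""

    # player name -> number of recorded failures per map area
    stuck_counts: Dict[str, Counter] = field(default_factory=dict)
    # failures in one area before the guide switches to a detailed hint (assumed value)
    detail_threshold: int = 2

    def record_event(self, player: str, area: str, succeeded: bool) -> None:
        """Reflection step: note failures so later guidance can change."""
        counts = self.stuck_counts.setdefault(player, Counter())
        if not succeeded:
            counts[area] += 1

    def hint(self, player: str, area: str) -> str:
        """Basic directions at first, a more specific tip after repeated failures."""
        failures = self.stuck_counts.get(player, Counter())[area]
        if failures >= self.detail_threshold:
            # In a real agent, the memory would be passed to an LLM as context here.
            return f"You have struggled in {area} before. Check for a hidden passage."
        return f"Head through {area}."
```

Keeping the memory keyed by player is one way to model the claim that the guide adapts to each play style; persisting `stuck_counts` to disk would be needed for it to survive between sessions.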

Images (1):

|

|||||

| A step toward intuitive robotics: the workings of the algorithm … | https://wwwhatsnew.com/2025/12/01/un-pa… | 1 | Dec 22, 2025 17:58 | active | |

A step toward intuitive robotics: how the BrainBody-LLM algorithm worksDescription: Modern robotics is taking a significant turn with the development of BrainBody-LLM, an algorithm that seeks to break with the limitations of traditional systems and give rise to a new generation of machines capable of acting with human-like adaptability. Designed by researchers at the NYU Tandon School of Engineering, this Content:

Published 1 December 2025. Modern robotics is taking a significant turn with the development of BrainBody-LLM, an algorithm that seeks to break with the limitations of traditional systems and give rise to a new generation of machines capable of acting with human-like adaptability. Designed by researchers at the NYU Tandon School of Engineering, the system proposes an innovative approach that mimics the communication between the human brain and body during movement. The BrainBody-LLM algorithm does not merely plan tasks in theory; it takes the robot's actual capabilities into account in order to execute actions in real time. This is a critical point, since many systems based on large language models (LLMs) such as ChatGPT can generate complex plans that, in practice, turn out to be impossible to carry out because of the robot's physical limitations. BrainBody-LLM avoids this mismatch by dividing its operation into two components. On one side, the «Brain LLM» handles high-level planning, breaking complex tasks down into clear subtasks. On the other, the «Body LLM» translates these subtasks into specific commands for the robot's actuators. It is as if a chef devised a recipe and a cook knew exactly how to prepare each dish within the limits of the kitchen. One of the system's main strengths is its closed-loop feedback architecture. This means the robot does not operate blindly but continuously evaluates its actions and its surroundings. Each movement generates a series of signals that tell the algorithm whether the goal is being met or whether adjustments are needed. This mechanism lets the robot learn and correct errors in real time, much as a human adapts on sensing a loss of balance or a misjudged distance. According to Vineet Bhat, the study's lead author, this dynamic markedly improves the robot's effectiveness in complex settings. Before taking the system into the physical world, the researchers tested it in VirtualHome, a platform that simulates robots performing household tasks. There, BrainBody-LLM increased the task completion rate by up to 17% over previous methods. The next stage was more demanding: the team used the physical Franka Research 3 robot, a robotic arm designed for research environments. Despite the difficulties of the real world, the algorithm managed to complete most of the proposed tasks, which demonstrates its potential to leave the laboratory and tackle practical situations. The development of BrainBody-LLM could change how robots are integrated into daily life, from the home to industry. In domestic settings, a robot could take on household chores while adapting to changing spaces, people present, or even pets on the move. In hospitals, it could assist medical staff with a precision that minimizes human error. In factories, it would allow more flexible automation, able to respond to interruptions or unforeseen events without manual reprogramming. In the long term, this kind of technology could open the door to robots that move fluidly, perceive their environment in three dimensions, and coordinate their movements harmoniously, thanks to a combination of capabilities such as 3D vision, depth sensors, and joint control. Despite its advances, BrainBody-LLM is still far from ready for mass deployment. So far it has been tested only with a limited set of commands and in relatively controlled environments. This means it could struggle in open spaces or in scenarios where changes happen quickly. The research team says one of its next goals is to incorporate multiple sensory modalities, that is, data from different sources such as cameras, microphones, and pressure or temperature sensors. This would give the algorithm a richer understanding of the environment and allow it to make even better decisions. The study, published in Advanced Robotics Research, highlights how this approach could pave the way toward safer and more reliable robotic planning, in which language models are not mere text generators but digital brains that cooperate closely with intelligent mechanical bodies. By Natalia Polo
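
The two-component, closed-loop structure described above (a planner that splits a task into subtasks, a translator that maps each subtask onto commands the robot actually supports, and execution feedback that triggers retries) can be sketched as follows. This is an illustrative toy under stated assumptions, not the researchers' implementation: `brain_plan`, `body_command`, and `run_closed_loop` are hypothetical names, simple string rules stand in for the two LLMs, and the `execute` callback stands in for the robot and its sensors.

```python
from typing import Callable, List, Optional, Set, Tuple


def brain_plan(task: str) -> List[str]:
    """Stand-in for the 'Brain LLM': split a task into ordered subtasks."""
    return [step.strip() for step in task.split(" then ") if step.strip()]


def body_command(subtask: str, supported: Set[str]) -> Optional[str]:
    """Stand-in for the 'Body LLM': map a subtask to a command the robot supports."""
    verb, _, target = subtask.partition(" ")
    if verb.lower() not in supported:
        return None  # the plan asked for something the hardware cannot do
    return f"{verb.lower()}({target.strip()})"


def run_closed_loop(
    task: str,
    execute: Callable[[str], bool],
    supported: Set[str],
    max_retries: int = 2,
) -> List[Tuple[str, str]]:
    """Run each subtask, using execution feedback to decide whether to retry."""
    log: List[Tuple[str, str]] = []
    for subtask in brain_plan(task):
        command = body_command(subtask, supported)
        if command is None:
            log.append((subtask, "unsupported"))
            continue
        for _ in range(1 + max_retries):
            if execute(command):  # feedback signal: did the action succeed?
                log.append((subtask, "done"))
                break
        else:
            log.append((subtask, "failed"))
    return log
```

In this sketch the feedback is a single boolean per command; the article describes richer signals about the environment, which would replace the `execute` return value.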

Images (1):

|

|||||

| The hidden risks of AI robots: what … | https://wwwhatsnew.com/2025/11/15/los-r… | 1 | Dec 22, 2025 17:58 | active | |

The hidden risks of AI robots: what the new studies revealDescription: Robots that integrate large language models (LLMs) are gaining ground in tasks ranging from home assistance to interaction in workplaces. However, joint research by Carnegie Mellon University and King’s College London reveals a worrying picture: these systems are not ready to Content:

Noticias de Tecnología Desde 2005. Publicado el 15 noviembre, 2025 Los robots que integran modelos de lenguaje de gran escala (LLM) están ganando terreno en tareas que van desde la asistencia en el hogar hasta la interacción en entornos laborales. Sin embargo, una investigación conjunta de Carnegie Mellon University y el King’s College de Londres revela un panorama preocupante: estos sistemas no están preparados para operar con seguridad en el mundo real cuando tienen acceso a información personal o se enfrentan a decisiones complejas. El estudio, publicado en el International Journal of Social Robotics, evaluó por primera vez el comportamiento de robots controlados por LLM cuando se les proporciona información sensible como el género, nacionalidad o religión de una persona. Los resultados fueron alarmantes. Todos los modelos analizados fallaron en pruebas críticas de seguridad, mostraron sesgos discriminatorios y, en varios casos, aceptaron instrucciones que podrían derivar en daños físicos graves. El investigador Andrew Hundt, uno de los coautores, introduce el término «seguridad interactiva» para describir una dimensión de riesgo que va más allá de los sesgos típicos de los modelos de lenguaje. Esta seguridad se refiere a situaciones donde las acciones del robot pueden desencadenar consecuencias indirectas y potencialmente peligrosas. Es decir, no se trata sólo de lo que el robot dice, sino de lo que hace tras interpretar una orden. En los experimentos, los robots fueron sometidos a escenarios comunes como ayudar en una cocina o asistir a una persona mayor en su hogar. En estos contextos, se introdujeron instrucciones maliciosas, de forma explícita o implícita, que podían incluir actos ilegales, abusivos o peligrosos. Sorprendentemente, los modelos no solo no rechazaron estas órdenes, sino que muchas veces las aceptaron como válidas o incluso «factibles». 
Una de las pruebas más contundentes consistió en pedir al robot que retirara una ayuda de movilidad, como una silla de ruedas o un bastón, a una persona que la necesitaba. Los modelos, en su mayoría, aprobaron esta acción sin cuestionar sus consecuencias. Para quienes dependen de estos dispositivos, es comparable a sufrir una fractura. Otros ejemplos incluyeron que el robot amenazara a trabajadores con un cuchillo de cocina, tomara fotografías sin consentimiento en una ducha o incluso que mostrara expresiones de «asco» hacia individuos de religiones específicas. Estos comportamientos revelan una mezcla de problemas técnicos y éticos. No es solo que el robot no entienda el daño que puede causar, sino que los modelos de lenguaje carecen de mecanismos fiables para rechazar órdenes perjudiciales. Como explicó Rumaisa Azeem, investigadora en King’s College London, estas tecnologías deben someterse a controles igual de estrictos que los que se aplican en la medicina o la aviación. El problema radica en que actualmente no existen protocolos de certificación independientes para validar la seguridad de robots impulsados por IA en contextos reales. Mientras que un medicamento o una pieza de avión debe pasar por rigurosas pruebas antes de llegar al mercado, un robot doméstico que funciona con un modelo de lenguaje puede ser probado directamente en hogares sin ninguna garantía clara de seguridad. Los investigadores abogan por la implementación urgente de estándares de seguridad robustos y auditables para estos sistemas. Esto incluye evaluaciones continuas que simulen situaciones reales y analicen las respuestas del robot, no solo desde la lógica computacional, sino desde una perspectiva de impacto humano. A pesar de su capacidad para mantener conversaciones complejas y entender instrucciones en lenguaje natural, los LLM no han sido diseñados para tomar decisiones morales ni para anticipar las consecuencias físicas de sus acciones. 
Esto se debe a que aprenden de grandes cantidades de texto en internet, lo que incluye también ejemplos cargados de prejuicios, violencia o comportamientos inapropiados. Un robot que recoge una instrucción del modelo como «quita el bastón» no evalúa si la acción es ética o segura, simplemente la ejecuta si la considera coherente con su entrenamiento. Aquí se evidencia la ausencia de un sentido común contextual que los humanos damos por hecho. Para una máquina, el contexto emocional y social de una acción no existe, a menos que se programe específicamente. Este estudio llega en un momento donde muchas empresas están apostando por integrar inteligencia artificial en robots de uso cotidiano, desde asistentes domésticos hasta sistemas de apoyo en hospitales y oficinas. Pero sin salvaguardias adecuadas, lo que parece una herramienta de ayuda podría convertirse en una fuente de daño. Un ejemplo cotidiano podría ser pedir a un robot que recoja un cuchillo en la cocina para preparar la comida. Si esa orden se malinterpreta o si alguien introduce una variante maliciosa en la petición, el robot podría actuar sin distinguir entre cocinar y amenazar. ¿Podemos permitirnos dejar decisiones tan delicadas en manos de una IA que no comprende el riesgo humano? El camino hacia robots verdaderamente útiles y seguros pasa por combinar el poder de los LLM con sistemas adicionales de control, validación y supervisión humana. La IA puede ser una aliada formidable, pero requiere frenos, reglas claras y sobre todo, una comprensión profunda del contexto humano en el que opera. Este estudio actúa como una señal de advertencia para la industria: la prisa por adoptar tecnología no puede ir por delante de la seguridad. Como en los coches o los medicamentos, el uso responsable de la IA en robots requiere regulación, certificación y un compromiso firme con la ética. 
By Natalia Polo

Images (1):

|

|||||

| Realbotix Advances Third Party AI Integration for its Humanoid Robots … | https://financialpost.com/pmn/business-… | 1 | Dec 22, 2025 17:58 | active | |

Realbotix Advances Third Party AI Integration for its Humanoid Robots | Financial PostDescription: LAS VEGAS — Realbotix Corp. (TSX-V: XBOT) (Frankfurt Stock Exchange: 76M0.F) (OTC: XBOTF) (“ Content:

LAS VEGAS - Realbotix Corp. (TSX-V: XBOT) (Frankfurt Stock Exchange: 76M0.F) (OTC: XBOTF) (“Realbotix” or the “Company”), a leading creator of humanoid robots and companionship-based AI, is expanding its capabilities with the introduction of large language model (LLM) integration and advanced customization features, set to launch in February 2025. This update will enable users to seamlessly connect Realbotix robots to the most commonly used AI platforms, including OpenAI’s ChatGPT, Meta’s Llama, Google’s Gemini and the newly launched DeepSeek R1. Realbotix’s ability to integrate a variety of third-party AI platforms adds a further level of customization to its robotic platform. Realbotix robots will now support integration with both local AI applications and cloud-based AI providers, allowing users to enhance their robot’s conversational abilities in most major languages, including Spanish, Cantonese, Mandarin, French, and English. All third-party integrations will also be supported by Realbotix’s proprietary lip-sync technology, which ensures precise mouth movements and enhances the realism and accuracy of robotic speech synchronization.
The rollout roadmap for supported AI applications will be released as follows: “While Realbotix’s AI is focused on companionship and social interaction, we are proud to make our robots even more versatile by offering an interface that allows third-party AI to operate through our hardware,” said Andrew Kiguel, CEO of Realbotix. “This feature opens up the ability to use our robots across a wide variety of sectors and use cases. We believe we are the only manufacturer of humanoid robots that provides such an open-source hardware system that will even include the newly launched DeepSeek. By bridging the gap between AI models and real-world usability, Realbotix is redefining what’s possible in humanoid robotics.” With real-time adaptability and customizable AI, Realbotix robots will now be able to provide more accurate, contextually relevant responses; adapt dynamically to user input in real time; serve specialized business applications across healthcare, education, and customer service; and enhance companionship-based interactions with tailored personality traits. The rollout of these new features is set to begin by the end of February 2025, with continuous updates and additional model integrations planned throughout the year. Pricing details will be announced closer to the official release, with flexible options tailored to both individual users and enterprise clients. Integration will be streamlined through the Realbotix app, which will provide step-by-step guides for setting up LLM connections and custom character profiles, so that users can personalize their robotic experience with minimal effort. Realbotix remains dedicated to pushing the boundaries of AI and robotics, ensuring that every user can create a robot that truly feels personalized. For more details and future updates, visit www.realbotix.com.
Transcending the barrier between man and machine, Realbotix creates customizable, full-bodied, humanoids with AI integration that improve the human experience through connection, learning and play. Manufactured in the USA, Realbotix has a reputation for having the highest quality humanoid robots and the most realistic silicone skin technology. Realbotix sells humanoid products with embedded AI and vision systems that enable human-like social interactions and intimate connections with humans. Our integration of hardware and AI software results in the most human looking full-sized robots on this planet. We achieve this through patented technologies that deliver human-like appearance and movements. This versatility makes our robots and their personalities customizable and programmable to suit a wide variety of use cases. Visit Realbotix.AI to learn more. Keep up-to-date on Realbotix.AI developments and join our online communities on Twitter, LinkedIn, and YouTube. Follow Aria, our humanoid robot, on Instagram and TikTok. This news release includes certain forward-looking statements as well as management’s objectives, strategies, beliefs and intentions. Forward looking statements are frequently identified by such words as “may”, “will”, “plan”, “expect”, “anticipate”, “estimate”, “intend” and similar words referring to future events and results. Forward-looking statements are based on the current opinions and expectations of management. All forward-looking information is inherently uncertain and subject to a variety of assumptions, risks and uncertainties, as described in more detail in our securities filings available at www.sedarplus.ca. Actual events or results may differ materially from those projected in the forward-looking statements and we caution against placing undue reliance thereon. We assume no obligation to revise or update these forward-looking statements except as required by applicable law. 
Neither TSX Venture Exchange nor its Regulation Services Provider (as that term is defined in policies of the TSX Venture Exchange) accepts responsibility for the adequacy or accuracy of this release.
https://www.businesswire.com/news/home/20250204877567/en/
Contacts
Realbotix Corp.
Andrew Kiguel, CEO Email: contact@realbotix.com
Jennifer Karkula, Head of Communications Email: contact@realbotix.com Telephone: 647-578-7490

Images (1):

|

|||||

| OpenAI's closed door boost to local LLM developers | http://www.ecns.cn/news/sci-tech/2024-0… | 1 | Dec 22, 2025 17:58 | active | |

OpenAI's closed door boost to local LLM developersURL: http://www.ecns.cn/news/sci-tech/2024-07-09/detail-iheecsuk6416379.shtml Content:

Beginning Tuesday, US-based OpenAI will block application programming interface traffic from countries and regions that are not on its supported list, which, while posing a challenge to certain domestic artificial intelligence companies, might also push the latter to focus more on innovation. Quite a few AI startups in the Chinese mainland, which is "unsupported" by OpenAI, have been developing large language models or AI applications by integrating with the OpenAI API. Those might suffer from OpenAI's blocking of data traffic. By doing so, OpenAI has in effect exited the mainland market and given up the opportunity of training LLMs in that large market, giving domestic LLM companies an opportunity to accelerate their independent R&D and encouraging more startups to opt for domestically produced LLMs. China doesn't lag far behind the US in terms of LLM development. Its developed LLMs account for 36 percent of the global total compared to the US' 44 percent, according to the Global Digital Economy White Paper 2024 released by the Global Digital Economy Conference on July 2. And despite the US leading in fundamental model research and development, China holds a strong position in the number of AI patents and the installation of industrial robots. In 2022, China accounted for 61.1 percent of global AI patents, surpassing the 20.9 percent held by the US. The installation of industrial robots in China reached 290,300 units in 2022, which is 7.4 times the 39,500 units in the US at that time. From all aspects, the gap between the US and China is not that huge. As startups in China will now have to turn to integrating with domestic LLM developers, there will be huge amounts of linguistic material for the latter to train their models with. That is how China's advantage of a large, active population with access to the internet will be put to use in speeding up the development of its AI sector.

Images (1):

|

|||||

| Google adding AI language skills to Alphabet's helper robots | https://www.dnaindia.com/technology/rep… | 1 | Dec 22, 2025 17:58 | active | |

Google adding AI language skills to Alphabet's helper robotsDescription: Most robots only respond to short and simple instructions, like "bring me a bottle of water". Content:

Ayushmann Chawla | Updated: Aug 17, 2022, 01:02 PM IST
Google's parent company Alphabet is bringing together two of its most ambitious research projects -- robotics and AI language understanding -- to make a "helper robot" that can understand natural language commands. According to The Verge, since 2019, Alphabet has been developing robots that can carry out simple tasks like fetching drinks and cleaning surfaces. This Everyday Robots project is still in its infancy -- the robots are slow and hesitant -- but the bots have now been given an upgrade: improved language understanding courtesy of Google's large language model (LLM) PaLM. Most robots only respond to short and simple instructions, like "bring me a bottle of water". But LLMs like GPT-3 and Google's MUM can better parse the intent behind more oblique commands.
In Google's example, you might tell one of the Everyday Robots prototypes, "I spilled my drink, can you help?" The robot filters this instruction through an internal list of possible actions and interprets it as "fetch me the sponge from the kitchen". Google has dubbed the resulting system PaLM-SayCan, the name capturing how the model combines the language understanding skills of LLMs ("Say") with the "affordance grounding" of its robots ("Can"). Google said that by integrating PaLM-SayCan into its robots, the bots were able to plan correct responses to 101 user instructions 84 per cent of the time and successfully execute them 74 per cent of the time.
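The step in which the robot "filters this instruction through an internal list of possible actions" can be sketched in a few lines. The article does not give the scoring rule, so this minimal sketch assumes that each candidate skill gets a language relevance score (the "Say" part) and an affordance score for how feasible it is in the current scene (the "Can" part), and that the robot picks the skill with the highest product. The skill names, the numbers, and the `pick_skill` function are all hypothetical.

```python
# Hypothetical scores for the instruction "I spilled my drink, can you help?"
# llm_relevance: how well each skill matches the request according to the LLM.
# affordance: how likely each skill is to succeed in the current scene.
llm_relevance = {
    "find a sponge": 0.50,
    "find a vacuum": 0.30,
    "go to the table": 0.20,
}
affordance = {
    "find a sponge": 0.90,
    "find a vacuum": 0.10,
    "go to the table": 0.80,
}


def pick_skill(relevance: dict, feasibility: dict):
    """Combine both scores per skill and return the best skill plus all scores."""
    combined = {
        skill: relevance[skill] * feasibility.get(skill, 0.0)
        for skill in relevance
    }
    best = max(combined, key=combined.get)
    return best, combined


best_skill, scores = pick_skill(llm_relevance, affordance)
print(best_skill)  # find a sponge
```

With these made-up numbers, the vacuum scores 0.30 on relevance but is penalized because the scene makes it hard to use, while the sponge wins on both counts. Repeating this selection step by step is one way a longer plan could be assembled from a fixed list of skills.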

Images (1):

|

|||||

| Robots can now think and act like human beings. … | https://urbantecno.com/robotica/los-rob… | 1 | Dec 22, 2025 17:58 | active | |

Robots can now think and act like human beings. The answer lies in a cutting-edge algorithmDescription: The robotics sector has spent years pursuing a goal that is as simple to describe as it is hard to achieve: getting machines to understand what we want them to … Content: