AUTOMATION HISTORY

Total Articles Scraped: 856

Total Images Extracted: 1474

Scraped Articles

| Action | Title | URL | Images | Scraped At | Status |

|---|---|---|---|---|---|

| Research: Human-like robots may be perceived as having mental states … | https://theprint.in/health/research-hum… | 1 | Jan 05, 2026 16:00 | active | |

Research: Human-like robots may be perceived as having mental states – ThePrint – ANIFeed Description: Washington [US], July 10 (ANI): According to new research, when robots appear to engage with people and display human-like emotions, people may perceive them as capable of ‘thinking,’ or acting on their own beliefs and desires rather than their programs. “The relationship between anthropomorphic shape, human-like behaviour and the tendency to attribute independent thought and […] Content:

Washington [US], July 10 (ANI): According to new research, when robots appear to engage with people and display human-like emotions, people may perceive them as capable of ‘thinking,’ or acting on their own beliefs and desires rather than their programs.

“The relationship between anthropomorphic shape, human-like behaviour and the tendency to attribute independent thought and intentional behavior to robots is yet to be understood,” said study author Agnieszka Wykowska, PhD, a principal investigator at the Italian Institute of Technology. “As artificial intelligence increasingly becomes a part of our lives, it is important to understand how interacting with a robot that displays human-like behaviors might induce higher likelihood of attribution of intentional agency to the robot.”

The research was published in the journal Technology, Mind, and Behavior.

Across three experiments involving 119 participants, researchers examined how individuals would perceive a human-like robot, the iCub, after socializing with it and watching videos together. Before and after interacting with the robot, participants completed a questionnaire that showed them pictures of the robot in different situations and asked them to choose whether the robot’s motivation in each situation was mechanical or intentional. For example, participants viewed three photos depicting the robot selecting a tool and then chose whether the robot “grasped the closest object” or “was fascinated by tool use.”

In the first two experiments, the researchers remotely controlled iCub’s actions so it would behave gregariously, greeting participants, introducing itself and asking for the participants’ names. Cameras in the robot’s eyes were also able to recognize participants’ faces and maintain eye contact. The participants then watched three short documentary videos with the robot, which was programmed to respond to the videos with sounds and facial expressions of sadness, awe or happiness.

In the third experiment, the researchers programmed iCub to behave more like a machine while it watched videos with the participants. The cameras in the robot’s eyes were deactivated so it could not maintain eye contact and it only spoke recorded sentences to the participants about the calibration process it was undergoing. All emotional reactions to the videos were replaced with a “beep” and repetitive movements of its torso, head and neck.

The researchers found that participants who watched videos with the human-like robot were more likely to rate the robot’s actions as intentional, rather than programmed, while those who only interacted with the machine-like robot were not. This shows that mere exposure to a human-like robot is not enough to make people believe it is capable of thoughts and emotions. It is human-like behavior that might be crucial for being perceived as an intentional agent.

According to Wykowska, these findings show that people might be more likely to believe artificial intelligence is capable of independent thought when it creates the impression that it can behave just like humans. This could inform the design of social robots of the future, she said.

“Social bonding with robots might be beneficial in some contexts, like with socially assistive robots. For example, in elderly care, social bonding with robots might induce a higher degree of compliance with respect to following recommendations regarding taking medication,” Wykowska said. “Determining contexts in which social bonding and attribution of intentionality is beneficial for the well-being of humans is the next step of research in this area.” (ANI)

This report is auto-generated from ANI news service. ThePrint holds no responsibility for its content.

Copyright © 2025 Printline Media Pvt. Ltd. All rights reserved.

Images (1):

|

|||||

| Neuromorphic Artificial Skin Mimics Human Touch for Efficient Robots | https://www.webpronews.com/neuromorphic… | 0 | Jan 05, 2026 16:00 | active | |

Neuromorphic Artificial Skin Mimics Human Touch for Efficient Robots URL: https://www.webpronews.com/neuromorphic-artificial-skin-mimics-human-touch-for-efficient-robots/ Description: Keywords Content: |

|||||

| Algolux Closes $18.4 Million Series B Round For Robust Computer … | https://www.thestreet.com/press-release… | 0 | Jan 05, 2026 08:00 | active | |

Algolux Closes $18.4 Million Series B Round For Robust Computer Vision Description: New investment will serve to accelerate market adoption of Algolux's robust and scalable computer vision and image optimization solutions MONTREAL, July 12, Content: |

|||||

| UniX AI pushes humanoid robots beyond demos and into service | https://interestingengineering.com/ai-r… | 1 | Jan 05, 2026 00:00 | active | |

UniX AI pushes humanoid robots beyond demos and into service URL: https://interestingengineering.com/ai-robotics/unix-ai-wanda-humanoid-robots-real-world-deployment Description: UniX AI readies Wanda 2.0 and 3.0 humanoid robots for real-world service work as embodied AI moves toward scale. Content:

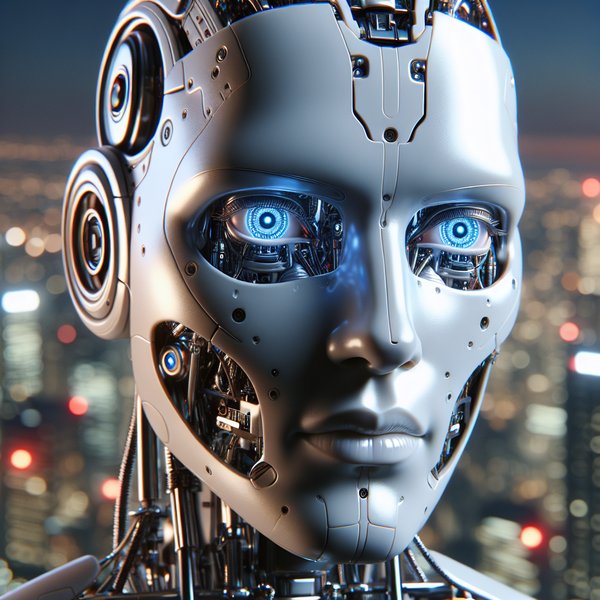

Wanda 2.0 and 3.0 are designed for repeatable service tasks, signaling a shift from humanoid demos to deployment.

UniX AI is readying its next-generation humanoid robots, Wanda 2.0 and Wanda 3.0, as commercially deployable systems designed for real-world service work. Built to move beyond controlled demonstrations and targeting environments where reliability, repetition, and adaptability determine whether humanoids can function at scale, the full-size humanoid robot will debut at CES 2026.

Wanda 2.0, UniX AI’s second-generation full-size humanoid robot, is equipped with 23 high-degree-of-freedom joints, an 8-DoF bionic arm, and adaptive intelligent grippers. According to the company, this configuration allows the robot to perform dexterous manipulation, autonomous perception, and coordinated task execution in complex, changing environments.

Rather than positioning the Wanda series as a showcase of isolated capabilities, UniX AI is framing the robots as general-purpose service systems that can learn workflows, adapt to new routines, and operate continuously across different settings. The approach reflects a shift in humanoid robotics, where success depends less on novelty and more on operational consistency.

The company says both Wanda 2.0 and Wanda 3.0 are already structured for scale, supported by mature engineering processes and supply chains. UniX AI claims it has reached a stable delivery capacity of 100 units per month, with deployments planned across hotels, property management, security, retail, and research and education.

To underline practical readiness, UniX AI will demonstrate the robots performing everyday service tasks in simulated environments, including drink preparation, dishwashing, clothes organization, bed-making, amenity replenishment, and waste sorting. The demonstrations are expected to take place during a major consumer electronics show in Las Vegas, where the company plans to formally unveil the robots. In one scenario, Wanda 2.0 prepares zero-alcohol beverages ordered through an app, identifying barware, controlling liquid proportions, and pouring steadily. Other setups replicate household and hotel workflows, emphasizing repeatable, high-frequency tasks that dominate service operations.

Powering the Wanda series is UniX AI’s in-house technology stack, which combines multimodal semantic keypoints with UniFlex for imitation learning, UniTouch for tactile perception, and UniCortex for long-sequence task planning. The company says this architecture enables robots to perceive environments, plan multi-step actions, and execute tasks autonomously without extensive reprogramming. UniX AI argues that such capabilities signal a broader inflection point for embodied AI, as humanoid robots move closer to commercial validation.

“The embodied AI industry is moving from the demonstration stage toward the validation and scale-up stage,” said UniX AI Founder and CEO Fengyu Yang. “The future of embodied intelligence belongs to companies that unify algorithmic capability, hardware capability, and scenario capability.”

Yang said UniX AI plans to continue advancing productization and global expansion following mass production in 2025. “Chinese embodied intelligence companies are no longer merely providers of cost advantages, but have evolved into entities capable of exporting mature products and application models to global markets.”

By anchoring its reveal in large-scale service scenarios rather than speculative use cases, UniX AI is positioning the Wanda series as part of the next wave of humanoid robots built for deployment, not just display.

With over a decade-long career in journalism, Neetika Walter has worked with The Economic Times, ANI, and Hindustan Times, covering politics, business, technology, and the clean energy sector. Passionate about contemporary culture, books, poetry, and storytelling, she brings depth and insight to her writing. When she isn’t chasing stories, she’s likely lost in a book or enjoying the company of her dogs.

All Rights Reserved, IE Media, Inc.

Images (1):

|

|||||

| KEENON Debuts First Bipedal Humanoid Service Robot at WAIC, Showcasing … | https://www.manilatimes.net/2025/07/26/… | 0 | Jan 04, 2026 16:00 | active | |

KEENON Debuts First Bipedal Humanoid Service Robot at WAIC, Showcasing Role-Specific Embodied AI Solutions Description: SHANGHAI, July 26, 2025 /PRNewswire/ -- The world premiere of KEENON Robotics' bipedal humanoid service robot, XMAN-F1, takes center stage at the World Artifici... Content: |

|||||

| KEENON Debuts First Bipedal Humanoid Service Robot at WAIC, Showcasing … | https://moneycompass.com.my/keenon-debu… | 1 | Jan 04, 2026 16:00 | active | |

KEENON Debuts First Bipedal Humanoid Service Robot at WAIC, Showcasing Role-Specific Embodied AI Solutions - Money Compass Description: Money Compass is one of the credible Chinese and English financial media in Malaysia with strong influence in Malaysia’s financial industry. As the winner of the SME Award in Malaysia for 5 consecutive years, we persistently propel the financial industry towards a mutually beneficial framework. Since 2004, with the dedication to advocating the public to practice financial planning in everyday life, Money Compass has accumulated a vast connection in ASEAN financial industries and garnered government agencies and corporate resources. At present, Money Compass is adjusting its pace to transform into Money Compass 2.0. Consolidating the existing connections and network, Money Compass Integrated Media Platform is founded, which is well grounded in Malaysia whilst serving the ASEAN region. The mission of the new Money Compass Integrated Media Platform is to become the financial freedom gateway to assist internet users enhance financial intelligence, create wealth opportunities and achieve financial freedom for everyone! Content:

SHANGHAI, July 26, 2025 /PRNewswire/ -- The world premiere of KEENON Robotics’ bipedal humanoid service robot, XMAN-F1, takes center stage at the World Artificial Intelligence Conference (WAIC) 2025 in Shanghai from July 26 to 29, where the pioneer in embodied intelligence showcases its latest innovations on the global stage for breakthrough AI advancements. Transforming its showground into an Embodied Service Experience Hub, KEENON immerses visitors in three interactive scenarios (medical station, lounge bar, and performance space), highlighting how embodied AI solutions are actively reshaping future lifestyles and industrial ecosystems. At the event, XMAN-F1 emerges as the core interactive demonstration, showcasing human-like mobility and precision in service tasks across diverse scenarios. From preparing popcorn to mixing personalized chilled beverages such as Sprite or Coke with adjustable ice levels, the robot demonstrates remarkable environmental adaptability and task execution. Scheduled stage appearances feature XMAN-F1 autonomously delivering digital presentations and product demos, powered by multimodal interaction and large language model technologies. Its fluid movements and naturalistic gestures establish it as the primary focus of attention, with visitors gathering to witness its engagement live. The demonstration further spotlights multi-robot collaboration in specialized environments. At the medical station, the humanoid XMAN-F1 partners with logistics robot M104 to create a closed-loop smart healthcare solution. The bar area features a highlight collaboration with Johnnie Walker Blue Label, the world’s leading premium whisky, where robotic bartenders work alongside delivery robot T10 to craft and serve bespoke beverages. The seamless multi-robot integration not only enhances operational efficiency but also signals the dawn of robotic system interoperability, moving far beyond single-task automation.
According to IDC’s latest report, KEENON leads the commercial service robot sector with 22.7% of global shipments and holds a definitive 40.4% share in food delivery robotics. At WAIC 2025, the company reinforces its market leadership while presenting its ecosystem-based strategy for cross-scenario embodied intelligence solutions. Looking ahead, KEENON will continue driving innovation in embodied intelligence, combining cutting-edge R&D and global partnerships to unlock the full potential of ‘Robotics+’ applications worldwide. SOURCE KEENON Robotics Copyright © 2024 Money Compass Media (M) Sdn Bhd. All Rights Reserved

Images (1):

|

|||||

| KEENON Debuts First Bipedal Humanoid Service Robot at WAIC, Showcasing … | https://bubblear.com/keenon-debuts-firs… | 1 | Jan 04, 2026 16:00 | active | |

KEENON Debuts First Bipedal Humanoid Service Robot at WAIC, Showcasing Role-Specific Embodied AI Solutions – The Bubble Content:

SHANGHAI, July 26, 2025 /PRNewswire/ -- The world premiere of KEENON Robotics’ bipedal humanoid service robot, XMAN-F1, takes center stage at the World Artificial Intelligence Conference (WAIC) 2025 in Shanghai from July 26 to 29, where the pioneer in embodied intelligence showcases its latest innovations on the global stage for breakthrough AI advancements. Transforming its showground into an Embodied Service Experience Hub, KEENON immerses visitors in three interactive scenarios (medical station, lounge bar, and performance space), highlighting how embodied AI solutions are actively reshaping future lifestyles and industrial ecosystems. At the event, XMAN-F1 emerges as the core interactive demonstration, showcasing human-like mobility and precision in service tasks across diverse scenarios. From preparing popcorn to mixing personalized chilled beverages such as Sprite or Coke with adjustable ice levels, the robot demonstrates remarkable environmental adaptability and task execution. Scheduled stage appearances feature XMAN-F1 autonomously delivering digital presentations and product demos, powered by multimodal interaction and large language model technologies. Its fluid movements and naturalistic gestures establish it as the primary focus of attention, with visitors gathering to witness its engagement live. The demonstration further spotlights multi-robot collaboration in specialized environments. At the medical station, the humanoid XMAN-F1 partners with logistics robot M104 to create a closed-loop smart healthcare solution. The bar area features a highlight collaboration with Johnnie Walker Blue Label, the world’s leading premium whisky, where robotic bartenders work alongside delivery robot T10 to craft and serve bespoke beverages. The seamless multi-robot integration not only enhances operational efficiency but also signals the dawn of robotic system interoperability, moving far beyond single-task automation.
According to IDC’s latest report, KEENON leads the commercial service robot sector with 22.7% of global shipments and holds a definitive 40.4% share in food delivery robotics. At WAIC 2025, the company reinforces its market leadership while presenting its ecosystem-based strategy for cross-scenario embodied intelligence solutions. Looking ahead, KEENON will continue driving innovation in embodied intelligence, combining cutting-edge R&D and global partnerships to unlock the full potential of ‘Robotics+’ applications worldwide. View original content to download multimedia: https://www.prnewswire.com/news-releases/keenon-debuts-first-bipedal-humanoid-service-robot-at-waic-showcasing-role-specific-embodied-ai-solutions-302514398.html SOURCE KEENON Robotics Disclaimer: The above press release comes to you under an arrangement with PR Newswire. Bubblear.com takes no editorial responsibility for the same.

Images (1):

|

|||||

| Chinese expert calls for world models and safety standards for … | https://biztoc.com/x/faa09dff872904e5?r… | 0 | Jan 04, 2026 16:00 | active | |

Chinese expert calls for world models and safety standards for embodied AI URL: https://biztoc.com/x/faa09dff872904e5?ref=ff Description: Andrew Yao Chi-chih, a world-renowned Chinese computer scientist, said embodied artificial intelligence still lacks key foundations, stressing the need for… Content: |

|||||

| The Quiet Architects of Embodied AI | https://medium.com/@noa.schachtel/the-q… | 0 | Jan 04, 2026 16:00 | active | |

The Quiet Architects of Embodied AI URL: https://medium.com/@noa.schachtel/the-quiet-architects-of-embodied-ai-fcec0f8617d6 Description: The Quiet Architects of Embodied AI Before a robot learns to pour a cup of coffee, someone watches it fail. Frame by frame, they mark where the metal hand hesit... Content: |

|||||

| BYD Globally Recruits Talent in the Field of Embodied AI … | https://pandaily.com/byd-globally-recru… | 1 | Jan 04, 2026 16:00 | active | |

BYD Globally Recruits Talent in the Field of Embodied AI - Pandaily URL: https://pandaily.com/byd-globally-recruits-talent-in-the-field-of-embodied-ai/ Description: BYD is also going to build humanoid robots and is recruiting talent in the field of embodied intelligence globally. Content:

A new giant has entered the field of humanoid robots. On December 13th, the 'BYD Recruitment' official account released information about recruiting for the 25th Embodied Intelligence Research Team. The positions include senior algorithm engineers, senior structural engineers, and senior simulation engineers, among others, with research directions covering humanoid robots, bipedal robots, and other areas. The target audience is master's and doctoral graduates from global universities in 2025. According to the team introduction, BYD's embodied intelligence research team is carrying out customized development of various types of robots and systems by closely studying application-scenario demand across the company, continuously enhancing the perception and decision-making capabilities of robots, and accelerating the deployment of intelligent applications in industry. Currently, the team has developed products such as industrial robots, intelligent collaborative robots, intelligent mobile robots, and humanoid robots. At BYD's 30th anniversary celebration and unveiling ceremony for its 10 millionth new energy vehicle last month, Wang Chuanfu, Chairman and President of BYD Company Limited, announced that the company will invest 100 billion yuan in developing artificial intelligence combined with automotive smart technology to achieve comprehensive vehicle intelligence advancement. The domestic humanoid robot company 'UBTECH' received investment from BYD in the early stages of its establishment. In October this year, UBTECH released its new-generation industrial humanoid robot, Walker S1, which is in training at BYD and other automotive factories. Pandaily is a tech media based in Beijing. 
Our mission is to deliver premium content and contextual insights on China's technology scene to the worldwide tech community. © 2017 - 2026 Pandaily. All rights reserved.

Images (1):

|

|||||

| Embodied Intelligence: The PRC’s Whole-of-Nation Push into Robotics | https://jamestown.org/program/embodied-… | 0 | Jan 04, 2026 16:00 | active | |

Embodied Intelligence: The PRC’s Whole-of-Nation Push into Robotics URL: https://jamestown.org/program/embodied-intelligence-the-prcs-whole-of-nation-push-into-robotics/ Description: Executive Summary: Since 2015, Beijing has pursued a whole-of-nation strategy to dominate intelligent robotics, combining vertical integration, policy coordinat... Content: |

|||||

| Alibaba Launches Robotics and Embodied AI - Pandaily | https://pandaily.com/alibaba-launches-r… | 1 | Jan 04, 2026 16:00 | active | |

Alibaba Launches Robotics and Embodied AI - Pandaily URL: https://pandaily.com/alibaba-launches-robotics-and-embodied-ai Description: Alibaba Group has set up a dedicated Robotics and Embodied AI team, signaling its entry into the fast-growing race among global tech giants to bring artificial intelligence into the physical world. Content:

October 8: Alibaba Group has formed an internal robotics team, signaling its formal entry into the global race among tech giants to build AI-powered physical products. On Wednesday, Lin Junyang, head of technology at Alibaba’s Tongyi Qianwen large model unit, announced on social media platform X that the company has established a “Robotics and Embodied AI Group.” The move highlights Alibaba’s strategic push from software-based AI into hardware and real-world applications. The announcement comes as global peers ramp up investments in robotics. On the same day, Japan’s SoftBank said it would acquire ABB’s industrial robotics business, deepening its footprint in what it calls “physical AI.” Alibaba Cloud has also made its first foray into embodied intelligence, leading a $140 million funding round last month in Shenzhen-based startup X Square Robot. At the 2025 Yunqi Cloud Summit two weeks ago, Alibaba CEO Wu Yongming projected global AI investment would surge to $4 trillion within five years, stressing that Alibaba must keep pace. In addition to the ¥380 billion earmarked in February for cloud and AI infrastructure, the company plans further spending.
From Multimodal Models to Real-World Agents
Lin also noted on X that “multimodal foundation models are now being transformed into fundamental agents capable of long-horizon reasoning through reinforcement learning, using tools and memory.” He added that such applications “should naturally move from the virtual world into the physical one.” As head of Tongyi Qianwen, Lin previously worked on multimodal models that process voice, images, and text. The new robotics group underscores Alibaba’s intent to extend its AI expertise into embodied products, aiming for a foothold in the fast-growing embodied AI market.
Backing X Square Robot
In September, Alibaba Cloud led a $140 million Series A+ round for X Square Robot, marking its first major investment in embodied intelligence. The Shenzhen startup, less than two years old, has raised about $280 million across eight funding rounds. X Square pursues a software-first strategy. Last month it released Wall-OSS, an open-source embodied intelligence foundation model, alongside its Quanta X2 robot. The machine can attach a mop head for 360-degree cleaning and features a robotic hand sensitive enough to detect subtle pressure changes, moving closer to human-like functionality. The company has not yet launched a consumer product, and pricing will vary by application. Research firm Humanoid Guide estimates its humanoid robot at around $80,000. X Square is already generating revenue from sales to schools, hotels, and elder-care facilities, and is preparing for an IPO next year. COO Yang Qian said the company expects “robot butlers” to become a reality within five years, but acknowledged that AI for robotics still lags behind advances in chatbots and code generation.
A Global Robotics Race
Alibaba’s entry comes as major tech firms double down on robotics. Venture capital has been pouring into the humanoid robot sector, with widespread belief that combining generative AI with robotics will transform human-machine interaction. At NVIDIA’s annual shareholder meeting in June, CEO Jensen Huang said AI and robotics represent two trillion-dollar growth opportunities for the company, predicting self-driving cars will be the first major commercial application. He envisioned billions of robots, hundreds of millions of autonomous vehicles, and tens of thousands of robotic factories powered by NVIDIA’s technology. Meanwhile, SoftBank this week announced a $5.4 billion cash acquisition of ABB’s robotics unit, which generated $2.3 billion in revenue in 2024 and employs about 7,000 people worldwide. Chairman Masayoshi Son described the deal as a step toward fusing “artificial superintelligence with robotics” to shape SoftBank’s “next frontier.” Citigroup estimates the global robotics market could reach $7 trillion by 2050, attracting vast capital inflows, including from state-backed funds, into one of technology’s most hotly contested arenas.

Images (1):

|

|||||

| Venturing into the "Robotics + Artificial Intelligence" Frontier Shenzhen Kingkey … | https://www.manilatimes.net/2025/12/31/… | 0 | Jan 04, 2026 16:00 | active | |

Venturing into the "Robotics + Artificial Intelligence" Frontier Shenzhen Kingkey Smart Agriculture Times Strategically Invests in Huibo Robotics Description: HONG KONG, Dec. 31, 2025 /PRNewswire/ -- On the evening of December 30th, Shenzhen Kingkey Smart Agriculture Times (stock code: a000048) announced the formal s... Content: |

|||||

| Fudan University unveils embodied AI institute | http://www.ecns.cn/news/society/2025-04… | 1 | Jan 04, 2026 16:00 | active | |

Fudan University unveils embodied AI institute URL: http://www.ecns.cn/news/society/2025-04-01/detail-iheqevhn5354677.shtml Content:

Shanghai-based service robotics provider Keenon Robotics unveils its latest humanoid service robot, XMAN-R1, on Monday. (Provided to chinadaily.com.cn) Shanghai-based Fudan University unveiled the Institute of Trustworthy Embodied Artificial Intelligence on Monday, marking the school's strategic move into the field of embodied intelligence and a significant step in positioning itself at the world's scientific and technological frontier. The institute will be dedicated to advancing cutting-edge research and practical applications in embodied intelligence, focusing on both fundamental theories and key technological breakthroughs, said Fudan University. By integrating disciplines such as computer vision, natural language processing, robotics, control systems, and technology ethics, the institute plans to develop intelligent entities with autonomous exploration capabilities, continuous evolutionary traits, and alignment with human values, providing a driving force for future human-machine collaboration and the development of an intelligent society. The institute will leverage interdisciplinary collaboration and industry-academia partnerships to design and build intelligent systems with physical bodies that can interact with the real world securely and reliably, according to Fudan University. During the unveiling event, the university also introduced four joint laboratories established in collaboration with four enterprises. For instance, a joint laboratory with Shanghai Baosight Software Co Ltd will focus on developing intelligent robots capable of withstanding high temperatures and disturbances in steel plants, enabling them to perform complex production operations effectively. Also on Monday, Shanghai-based service robotics provider Keenon Robotics unveiled its latest humanoid service robot, XMAN-R1. Leveraging vast real-world data, the company aims to foster a collaborative ecosystem with a diverse range of humanoid service robots. 
Designed around the principles of specialization, affability and safety, XMAN-R1 is tailored to fit seamlessly into the service industry scenarios that Keenon Robotics specializes in. XMAN-R1 can currently complete tasks ranging from order taking and food preparation to delivery and collection, with plans to expand to more diverse settings, said the company, which was founded in 2010. Mimicking the movement logic and postures of service personnel, XMAN-R1, which is designed with human body proportions, can hand over items to customers and collaborate with the company's delivery and cleaning robots, adapting to the specific requirements of each role. It is also equipped with a large language model and expression feedback for human-like interactions that make its service more approachable. Keenon Robotics has been dedicated to diverse service scenarios for 15 years, deploying over 100,000 specialized robots for delivery, cleaning, and other functions in more than 600 cities and regions across 60 countries.

Images (1):

|

|||||

| KraneShares Global Humanoid & Embodied Intelligence Index UCITS ETF (KOID) … | https://www.manilatimes.net/2025/10/09/… | 0 | Jan 04, 2026 16:00 | active | |

KraneShares Global Humanoid & Embodied Intelligence Index UCITS ETF (KOID) Launches on the London Stock Exchange Description: LONDON, Oct. 09, 2025 (GLOBE NEWSWIRE) -- KraneShares, a global asset manager known for its innovative exchange-traded funds (ETFs), today announced the launch ... Content: |

|||||

| What are physical AI and embodied AI? The robots know … | https://www.fastcompany.com/91363903/fo… | 1 | Jan 04, 2026 16:00 | active | |

What are physical AI and embodied AI? The robots know - Fast Company Description: Physical AI and Embodied AI, which allow bots to understand and navigate the real world, are powering the robot revolution. Content:

07-19-2025 TECH. By Michael Grothaus. Amazon recently announced that it had deployed its one-millionth robot across its workforce since rolling out its first bot in 2012. The figure is astounding from a sheer numbers perspective, especially considering that we’re talking about just one company. The one million bot number is all the more striking, though, since it took Amazon merely about a dozen years to achieve. It took the company nearly 30 years to build its current workforce of 1.5 million humans. At this rate, Amazon could soon “employ” more bots than people. Other companies are likely to follow suit, and not just in factories. Robots will be increasingly deployed in a wide range of traditional blue-collar roles, including delivery, construction, and agriculture, as well as in white-collar spaces like retail and food services. This occupational versatility will stem not only from their physical designs (joints, gyroscopes, and motors) but also from the two burgeoning fields of artificial intelligence that power their “brains”: Physical AI and Embodied AI. Here’s what you need to understand about each and how they differ from the generative AI that powers chatbots like ChatGPT. Physical AI refers to artificial intelligence that understands the physical properties of the real world and how these properties interact. As artificial intelligence leader Nvidia explains it, Physical AI is also known as “generative physical AI” because it can analyze data about physical processes and generate insights or recommendations for actions that a person, government, or machine should take. In other words, Physical AI can reason about the physical world. This real-world reasoning ability has numerous applications. A Physical AI system receiving data from a rain sensor may be able to predict if a certain location will flood. 
It can make these predictions by reasoning about real-time weather data using its understanding of the physical properties of fluid dynamics, such as how water is absorbed or repelled by specific landscape features. Physical AI can also be used to build digital twins of environments and spaces, from an individual factory to an entire city. It can help determine the optimal floor placement for heavy manufacturing equipment, for example, by understanding the building’s physical characteristics, such as the weight capacity of each floor based on its material composition. Or it can improve urban planning by analyzing things like traffic flows, how trees impact heat retention on streets, and how building heights affect sunlight distribution in neighborhoods. Embodied AI refers to artificial intelligence that “lives” inside (“embodies”) a physical vessel that can move around and physically interact with the real world. Embodied AI can inhabit various objects, including smart vacuum cleaners, humanoid robots, and self-driving cars.

Images (1):

|

|||||

| Accelerating the Evolution of Automotive Embodied Intelligence, Geely Auto Group … | https://www.manilatimes.net/2025/07/31/… | 0 | Jan 04, 2026 16:00 | active | |

Accelerating the Evolution of Automotive Embodied Intelligence, Geely Auto Group Teams Up with StepFun for a Joint Showcase at the 2025 World Artificial Intelligence Conference Description: Hangzhou, China, July 30, 2025 (GLOBE NEWSWIRE) -- On July 26, Geely Auto Group partnered with its strategic tech ecosystem partner, StepFun, to jointly exhib... Content: |

|||||

| KraneShares Launches First Global Humanoid & Embodied Intelligence ETF (Ticker: … | https://markets.businessinsider.com/new… | 1 | Jan 04, 2026 16:00 | active | |

KraneShares Launches First Global Humanoid & Embodied Intelligence ETF (Ticker: KOID) On Nasdaq | Markets Insider Description: NEW YORK, June 05, 2025 (GLOBE NEWSWIRE) -- Krane Funds Advisors, LLC (“KraneShares”), an asset management firm known for its global exchange-tr... Content:

NEW YORK, June 05, 2025 (GLOBE NEWSWIRE) -- Krane Funds Advisors, LLC (“KraneShares”), an asset management firm known for its global exchange-traded funds (ETFs), announced the launch of the KraneShares Global Humanoid and Embodied Intelligence Index ETF (Ticker: KOID). KOID represents the first US-listed thematic equity ETF that captures the global humanoid opportunity.[1] Thanks to breakthroughs in Artificial Intelligence (AI), machine learning, advanced materials, and robotics manufacturing, commercial and retail applications of humanoid robotics and embodied intelligence are now a reality. Humanoid robots, including Tesla’s Optimus, Figure AI, and Unitree, are already demonstrating impressive performance in human tasks, including in both factory and home settings. The Morgan Stanley Global Humanoid Model projects there could be 1 billion humanoids and $5 trillion in annual revenue by 2050.[2] KOID seeks to capture the global humanoid and embodied intelligence ecosystem, which refers to AI systems integrated into physical machines that can sense, learn, and interact with the real world. Humanoid robotics, a key subset of embodied intelligence, focuses on robots with human-like forms and capabilities designed to work seamlessly in environments built for people, like factories, hospitals, and homes. The acceleration of bringing robots to the commercial and retail markets stems from the need to address urgent global challenges like labor shortages, aging populations, and greater efficiency and safety across industries. “Soon, the cost of a humanoid robot could be less than a car[3],” said KraneShares Senior Investment Strategist Derek Yan, CFA. “We see compelling investment opportunities among the humanoid enablers and supply-chain partners that will bring humanoid robots into our daily lives at scale.” 
Unlike legacy robotics‐focused ETFs, KOID focuses exclusively on humanoid robotics and embodied AI, positioning itself at the forefront of the next generation of robotics innovation. KOID aims to capture the full spectrum of enabling technologies that form the foundation of humanoid development, including humanoid integration & manufacturing, mechanical systems, sensing & perception, actuation systems (the “muscle” of the robot), semiconductors & technology, and critical materials. KOID offers global exposure to companies based primarily in the United States, China, and Japan within the information technology, industrial, and consumer discretionary sectors. “We are excited to bring the Humanoid opportunity to global investors through KOID, the latest addition to our suite of innovative global thematic ETFs,” said KraneShares CEO Jonathan Krane. “At KraneShares, our core goal is to launch strategies like KOID to capture emerging megatrends, giving our clients access to powerful growth opportunities as they accelerate.” The KOID ETF will track the MerQube Global Humanoid and Embodied Intelligence Index, which is designed to capture the performance of companies engaged in humanoid and embodied intelligence-related business. For more information on the KraneShares Global Humanoid and Embodied Intelligence Index ETF (Ticker: KOID), please visit https://kraneshares.com/koid or consult your financial advisor. About KraneShares KraneShares is a specialist investment manager focused on China, Climate, and Alternatives. KraneShares seeks to provide innovative, high-conviction, and first-to-market strategies based on the firm and its partners' deep investing knowledge. KraneShares identifies and delivers groundbreaking capital market opportunities and believes investors should have cost-effective and transparent tools for attaining exposure to various asset classes. The firm was founded in 2013 and serves institutions and financial professionals globally. 
The firm is a signatory of the United Nations-supported Principles for Responsible Investment (UN PRI). Citations: Carefully consider the Funds’ investment objectives, risk factors, charges and expenses before investing. This and additional information can be found in the Funds' full and summary prospectus, which may be obtained by visiting https://kraneshares.com/koid. Read the prospectus carefully before investing. Risk Disclosures: Investing involves risk, including possible loss of principal. There can be no assurance that a Fund will achieve its stated objectives. Indices are unmanaged and do not include the effect of fees. One cannot invest directly in an index. This information should not be relied upon as research, investment advice, or a recommendation regarding any products, strategies, or any security in particular. This material is strictly for illustrative, educational, or informational purposes and is subject to change. Certain content represents an assessment of the market environment at a specific time and is not intended to be a forecast of future events or a guarantee of future results; material is as of the dates noted and is subject to change without notice. Humanoid and embedded intelligence technology companies often face high research and capital costs, resulting in variable profitability in a competitive market where products can quickly become obsolete. Their reliance on intellectual property makes them vulnerable to losses, while legal and regulatory changes can impact profitability. Defining these companies can be complex, and some may risk commercial failure. They are also affected by global scientific developments, leading to rapid obsolescence, and may be subject to government regulations. Many companies in which the Fund invests may not currently be profitable, with no guarantee of future success. A-Shares are issued by companies in mainland China and traded on local exchanges. 
They are available to domestic and certain foreign investors, including QFIs and those participating in Stock Connect Programs like Shanghai-Hong Kong and Shenzhen-Hong Kong. Foreign investments in A-Shares face various regulations and restrictions, including limits on asset repatriation. A-Shares may experience frequent trading halts and illiquidity, which can lead to volatility in the Fund’s share price and increased trading halt risks. The Chinese economy is an emerging market, vulnerable to domestic and regional economic and political changes, often showing more volatility than developed markets. Companies face risks from potential government interventions, and the export-driven economy is sensitive to downturns in key trading partners, impacting the Fund. U.S.-China tensions raise concerns over tariffs and trade restrictions, which could harm China’s exports and the Fund. China’s regulatory standards are less stringent than in the U.S., resulting in limited information about issuers. Tax laws are unclear and subject to change, potentially impacting the Fund and leading to unexpected liabilities for foreign investors. Fluctuations in currency of foreign countries may have an adverse effect on domestic currency values. The Japanese economy depends heavily on international trade and is vulnerable to economic, political, and social instability, which could affect the Fund. The yen is volatile, influenced by fluctuations in Asia, and has historically shown unpredictable movements against the U.S. dollar. Natural disasters, such as earthquakes and tidal waves, also pose risks. Furthermore, government intervention and an unstable financial services sector can negatively impact the economy, which relies significantly on trade with developing nations in East and Southeast Asia. The Fund invests in non-U.S. securities, which can be less liquid and subject to weaker regulatory oversight compared to U.S. securities. 
Risks include currency fluctuations, political or economic instability, incomplete financial disclosure, and potential taxes or nationalization of holdings. Foreign trading hours and settlement processes may also limit the Fund’s ability to trade, and different accounting standards can add complexity. Suspensions of foreign securities may adversely impact the Fund, and delays in settlement or holidays may hinder asset liquidation, increasing the risk of loss. The Fund may invest in derivatives, which are often more volatile than other investments and may magnify the Fund’s gains or losses. A derivative (i.e., futures/forward contracts, swaps, and options) is a contract that derives its value from the performance of an underlying asset. The primary risk of derivatives is that changes in the asset’s market value and the derivative may not be proportionate, and some derivatives can have the potential for unlimited losses. Derivatives are also subject to liquidity and counterparty risk. The Fund is subject to liquidity risk, meaning that certain investments may become difficult to purchase or sell at a reasonable time and price. If a transaction for these securities is large, it may not be possible to initiate, which may cause the Fund to suffer losses. Counterparty risk is the risk of loss in the event that the counterparty to an agreement fails to make required payments or otherwise comply with the terms of the derivative. Large capitalization companies may struggle to adapt fast, impacting their growth compared to smaller firms, especially in expansive times. This could result in lower stock returns than investing in smaller and mid-sized companies. In addition to the normal risks associated with investing, investments in smaller companies typically exhibit higher volatility. A large number of shares of the Fund is held by a single shareholder or a small group of shareholders. 
Redemptions from these shareholders can harm Fund performance, especially in declining markets, leading to forced sales at disadvantageous prices, increased costs, and adverse tax effects for remaining shareholders. The Fund is new and does not yet have a significant number of shares outstanding. If the Fund does not grow in size, it will be at greater risk than larger funds of wider bid-ask spreads for its shares, trading at a greater premium or discount to NAV, liquidation and/or a trading halt. Narrowly focused investments typically exhibit higher volatility. The Fund’s assets are expected to be concentrated in a sector, industry, market, or group of concentrations to the extent that the Underlying Index has such concentrations. The securities or futures in that concentration could react similarly to market developments. Thus, the Fund is subject to loss due to adverse occurrences that affect that concentration. KOID is non-diversified. Neither MerQube, Inc. nor any of its affiliates (collectively, “MerQube”) is the issuer or producer of KOID and MerQube has no duties, responsibilities, or obligations to investors in KOID. The index underlying the KOID is a product of MerQube and has been licensed for use by Krane Funds Advisors, LLC and its affiliates. Such index is calculated using, among other things, market data or other information (“Input Data”) from one or more sources (each such source, a “Data Provider”). MerQube® is a registered trademark of MerQube, Inc. These trademarks have been licensed for certain purposes by Krane Funds Advisors, LLC and its affiliates in its capacity as the issuer of the KOID. 
KOID is not sponsored, endorsed, sold or promoted by MerQube, any Data Provider, or any other third party, and none of such parties make any representation regarding the advisability of investing in securities generally or in KOID particularly, nor do they have any liability for any errors, omissions, or interruptions of the Input Data, MerQube Global Humanoid and Embodied Intelligence Index, or any associated data. Neither MerQube nor the Data Providers make any representation or warranty, express or implied, to the owners of the shares of KOID or to any member of the public, of any kind, including regarding the ability of the MerQube Global Humanoid and Embodied Intelligence Index to track market performance or any asset class. The MerQube Global Humanoid and Embodied Intelligence Index is determined, composed and calculated by MerQube without regard to Krane Funds Advisors, LLC and its affiliates or the KOID. MerQube and Data Providers have no obligation to take the needs of Krane Funds Advisors, LLC and its affiliates or the owners of KOID into consideration in determining, composing or calculating the MerQube Global Humanoid and Embodied Intelligence Index. Neither MerQube nor any Data Provider is responsible for and have not participated in the determination of the prices or amount of KOID or the timing of the issuance or sale of KOID or in the determination or calculation of the equation by which KOID is to be converted into cash, surrendered or redeemed, as the case may be. MerQube and Data Providers have no obligation or liability in connection with the administration, marketing or trading of KOID. There is no assurance that investment products based on the MerQube Global Humanoid and Embodied Intelligence Index will accurately track index performance or provide positive investment returns. MerQube is not an investment advisor. 
Inclusion of a security within an index is not a recommendation by MerQube to buy, sell, or hold such security, nor is it considered to be investment advice. NEITHER MERQUBE NOR ANY OTHER DATA PROVIDER GUARANTEES THE ADEQUACY, ACCURACY, TIMELINESS AND/OR THE COMPLETENESS OF THE MERQUBE GLOBAL HUMANOID AND EMBODIED INTELLIGENCE INDEX OR ANY DATA RELATED THERETO (INCLUDING DATA INPUTS) OR ANY COMMUNICATION WITH RESPECT THERETO. NEITHER MERQUBE NOR ANY OTHER DATA PROVIDERS SHALL BE SUBJECT TO ANY DAMAGES OR LIABILITY FOR ANY ERRORS, OMISSIONS, OR DELAYS THEREIN. MERQUBE AND ITS DATA PROVIDERS MAKE NO EXPRESS OR IMPLIED WARRANTIES, AND THEY EXPRESSLY DISCLAIM ALL WARRANTIES, OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE OR USE OR AS TO RESULTS TO BE OBTAINED BY KRANE FUNDS ADVISORS, LLC AND ITS AFFILIATES, OWNERS OF THE KOID, OR ANY OTHER PERSON OR ENTITY FROM THE USE OF THE MERQUBE GLOBAL HUMANOID AND EMBODIED INTELLIGENCE INDEX OR WITH RESPECT TO ANY DATA RELATED THERETO. WITHOUT LIMITING ANY OF THE FOREGOING, IN NO EVENT WHATSOEVER SHALL MERQUBE OR DATA PROVIDERS BE LIABLE FOR ANY INDIRECT, SPECIAL, INCIDENTAL, PUNITIVE, OR CONSEQUENTIAL DAMAGES INCLUDING BUT NOT LIMITED TO, LOSS OF PROFITS, TRADING LOSSES, LOST TIME OR GOODWILL, EVEN IF THEY HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES, WHETHER IN CONTRACT, TORT, STRICT LIABILITY, OR OTHERWISE. THE FOREGOING REFERENCES TO “MERQUBE” AND/OR “DATA PROVIDER” SHALL BE CONSTRUED TO INCLUDE ANY AND ALL SERVICE PROVIDERS, CONTRACTORS, EMPLOYEES, AGENTS, AND AUTHORIZED REPRESENTATIVES OF THE REFERENCED PARTY. ETF shares are bought and sold on an exchange at market price (not NAV) and are not individually redeemed from the Fund. However, shares may be redeemed at NAV directly by certain authorized broker-dealers (Authorized Participants) in very large creation/redemption units. The returns shown do not represent the returns you would receive if you traded shares at other times. 
Shares may trade at a premium or discount to their NAV in the secondary market. Brokerage commissions will reduce returns. Beginning 12/23/2020, market price returns are based on the official closing price of an ETF share or, if the official closing price isn't available, the midpoint between the national best bid and national best offer ("NBBO") as of the time the ETF calculates the current NAV per share. Prior to that date, market price returns were based on the midpoint between the Bid and Ask price. NAVs are calculated using prices as of 4:00 PM Eastern Time. The KraneShares ETFs and KFA Funds ETFs are distributed by SEI Investments Distribution Company (SIDCO), 1 Freedom Valley Drive, Oaks, PA 19456, which is not affiliated with Krane Funds Advisors, LLC, the Investment Adviser for the Funds, or any sub-advisers for the Funds. Copyright © 2025 Insider Inc and finanzen.net GmbH. All rights reserved.

Images (1):

|

|||||

| Dreame’s new floor washers show embodied intelligence entering homes first … | https://kr-asia.com/dreames-new-floor-w… | 1 | Jan 04, 2026 16:00 | active | |

Dreame’s new floor washers show embodied intelligence entering homes first through appliances
Description: While humanoid robots remain the holy grail, companies like Dreame are tapping AI to enhance a wide range of household devices.
Content:

Written by 36Kr English. Published on 16 Sep 2025.
When competition over specifications in home appliances reaches a bottleneck, artificial intelligence may be the factor that breaks the deadlock while making products more user-friendly. At its latest launch event, Dreame introduced more than 30 new products, with two floor washers drawing the most attention. Each features a pair of robotic arms that can self-clean edges and scrub floors as users push the machine. “In recent years, the floor washer segment has focused too much on pushing parameters to the extreme, but that doesn’t necessarily solve users’ real pain points, such as stubborn stains or cleaning low spaces,” said Wang Hongpin, head of product and R&D for Dreame’s floor washer division in China. Increasing suction power does not always improve cleaning results. Higher power can also create more noise and shorten battery life. By contrast, AI introduces new functionality through environmental sensing, user intent recognition, and action decision-making, enabling floor washers to address problems that were previously unresolved. This may represent a stepping stone. Before humanoid robots enter households, AI-enabled appliances with basic embodied intelligence are already easing housework. The concept extends beyond floor washers. Dreame’s new lineup now includes refrigerators, air conditioners, and televisions, signaling its ambition to expand beyond cleaning devices and position itself as a full-fledged home appliance company. From large appliances such as refrigerators to smaller items like hair dryers and smart rings, AI integration is a recurring theme. Dreame also disclosed for the first time that it plans to launch its own smart glasses and is exploring companion robots. Pan Zhidong, head of Dreame’s AI smart hardware division, said in an interview that the company intends to use smart rings and glasses as entry points to connect all its products.
According to his vision, Dreame’s smart home ecosystem will expand outward from cleaning appliances to automotive applications, with AI-driven hardware serving more aspects of daily life. The clearest examples of Dreame’s AI integration are the two new floor washers with robotic arms. The T60 Ultra and H60 Ultra each feature two robotic arms designed for scrubbing. The front arm uses a flexible scraper to clear watermarks and dirt along edges, while the rear arm applies pressure to tackle stubborn stains. As users push the washer forward, the AI-controlled arms perform in tandem. One acts more softly than the other, like a pair of helping hands. This directly addresses pain points that traditional floor washers have long left unresolved. In recent years, the industry has been locked in a race to maximize specifications—stronger suction, higher water output, more powerful motors. Yet these boosts have had limited effect on edge cleaning and narrow gaps. To address this, the T60 and H60 incorporate embodied intelligence. Under AI control, the robotic arms can sense the environment and make real-time decisions. The washers detect floor dirt levels through high-precision sensors and magnetic rings. Once stains are identified, AI adjusts suction and water output. If stains prove difficult to remove, the machine alerts users with lights and sounds, suggesting manual use of steam or hot water modes. Acting as the “brain,” the AI system orchestrates the machine’s actions, controlling arm movements based on whether the device is advancing or reversing. “The floor washer market is in a fiercely competitive state, with each player choosing its own angle. Dreame hopes to solve user pain points more effectively through embodied intelligence,” Wang said. He added that the goal is to give machines more efficient and thorough cleaning capabilities, while also making them flexible enough to reach under furniture and scrub tough stains. 
The AI-first approach extends to other Dreame products. Its new hair dryer can detect its distance from hair and automatically adjust airflow and temperature. The company’s latest refrigerator uses built-in models to monitor and regulate oxygen levels, while also sterilizing compartments to preserve freshness. Another highlight is Dreame’s smart ring, which serves as a control point for smart home devices and tracks health metrics. It is designed to connect with Dreame’s upcoming car and other outdoor devices as well. Underlying these products is a consistent logic: as traditional hardware upgrades in home appliances yield diminishing returns, AI can create new space for innovation. According to All View Cloud (AVC), the market for cleaning appliances such as floor washers and robot vacuums has been expanding rapidly. Cumulative sales reached RMB 22.4 billion (USD 3.1 billion), up 30% year-on-year (YoY), with unit sales of 16.55 million, an increase of 22.1%. Growing sales have attracted more entrants. On August 6, 2025, DJI released its Romo robot vacuum, marking its crossover into smart home cleaning and further intensifying competition. Yet profit growth has not kept pace with sales. In 2024, Ecovacs Robotics reported net profit of RMB 806 million (USD 112.8 million), less than half its 2021 peak. Roborock’s 2024 revenue rose 38% YoY, but its net profit slipped 3.6%. Both companies attributed pressure on earnings to price wars and heightened competition. Some in the industry see AI as a potential way out of this cycle. Earlier this year, Roborock introduced what it described as the world’s first mass-produced bionic hand vacuum cleaner, which can sweep floors while also picking up small items. AI enables the device to recognize objects and calculate the best way to grasp them, improving collection accuracy. Dreame’s floor washers follow a similar principle, identifying stains with AI and adapting cleaning modes on the fly. 
Whether it is a vacuum that automatically lends a hand when it senses dirt or a hair dryer that adjusts heat based on hair condition, today’s AI appliances are not trying to mimic humanoid robots. Instead, they apply embodied intelligence in targeted ways to solve specific household tasks. This could mark a transitional stage for embodied intelligence, offering a practical route for deployment in everyday life. Wang believes advances in smart technology will expand opportunities for floor washers. “With embodied intelligence and robotic arms, floor washers now have eyes, a brain, and hands. In the future, consumers may only need to push the machine casually around their homes, while it autonomously adjusts to different scenarios and completes the job,” he said. Beyond its latest launches, Dreame plans to release smart glasses in the first quarter of 2026, along with companion robots, though details have not yet been confirmed. KrASIA Connection features translated and adapted content that was originally published by 36Kr. This article was written by Fu Chong for 36Kr.

Images (1):

|

|||||

| Title: The Future of Artificial Intelligence in 2025: Trends, Challenges … | https://medium.com/@mashirosenpai0/titl… | 0 | Jan 04, 2026 16:00 | active | |

Title: The Future of Artificial Intelligence in 2025: Trends, Challenges & Opportunities
Description: Title: The Future of Artificial Intelligence in 2025: Trends, Challenges & Opportunities Meta Description: Explore the latest trends and challenges of Artificia...
Content: |

|||||

| The Future is Here: Embodied Intelligent Robots | https://www.manilatimes.net/2025/07/30/… | 0 | Jan 04, 2026 16:00 | active | |

The Future is Here: Embodied Intelligent Robots
Description: BEIJING, July 30, 2025 /PRNewswire/ -- A news report from en.qstheory.cn:
Content: |

|||||

| World's largest embodied AI data factory opens in Tianjin | http://www.ecns.cn/news/cns-wire/2025-0… | 1 | Jan 04, 2026 16:00 | active | |

World's largest embodied AI data factory opens in Tianjin
URL: http://www.ecns.cn/news/cns-wire/2025-06-24/detail-ihestqxv5318579.shtml
Content:

(ECNS) -- The world's largest embodied artificial intelligence data facility, Pacini Perception Technology's Super Embodied Intelligence Data (Super EID) Factory, officially opened in Tianjin Municipality on Tuesday. Spanning 12,000 square meters, the facility is the world's leading base for embodied AI data collection and model training. Equipped with 150 data units developed in-house, it is expected to produce nearly 200 million high-quality AI training samples annually. The base features a "15+N" full-scenario matrix system encompassing thousands of task scenarios across automotive manufacturing, 3C (computer, communication, and consumer electronics) product assembly, household, office, and food service environments. Xu Jincheng, Pacini's CEO and founder, explained that the facility's core technology utilizes synchronized high-precision capture of human hand movements combined with visual-tactile modality alignment. This means the data samples combine 3D vision and touch-sensing, allowing robots to better mimic human interaction. The approach overcomes traditional robotics-dependent data collection limitations and dramatically improves data versatility, Xu said. The facility will not only serve as a data hub but also evolve into an innovation engine for the embodied AI industry, the CEO added. China has produced nearly 100 embodied AI robotic products since 2024, capturing 70% of the global market, according to data released by the Ministry of Industry and Information Technology in April. According to a report from Head Leopard Shanghai Research Institute, China's embodied AI market size reached 418.6 billion yuan ($58 billion) in 2023 and is expected to reach 632.8 billion yuan by 2027, driven by breakthroughs in AI technology, the Securities Daily reported. (By Zhang Dongfang)

Images (1):

|

|||||

| SenseTime deepens its push into embodied intelligence with ACE Robotics | https://kr-asia.com/sensetime-deepens-i… | 1 | Jan 04, 2026 16:00 | active | |

SenseTime deepens its push into embodied intelligence with ACE Robotics
URL: https://kr-asia.com/sensetime-deepens-its-push-into-embodied-intelligence-with-ace-robotics
Description: Wang Xiaogang explains what the new robotics venture means for SenseTime’s AI plans.
Content: